Rate Limiting

Unrestricted resource consumption is included in the OWASP API Top 10 2023 list of the most serious API security risks. Lack of rate limiting is one of the main causes of this risk. Without proper rate limiting measures, APIs are vulnerable to attacks such as denial-of-service (DoS), brute force, and API overuse. This article explains how to safeguard your API and users with Wallarm's rate limiting rule.

Wallarm provides the Advanced rate limiting rule to help prevent excessive traffic to your API. This rule enables you to specify the maximum number of connections that can be made to a particular scope, while also ensuring that incoming requests are evenly distributed. If a request exceeds the defined limit, Wallarm rejects it and returns the code you selected in the rule.

Wallarm examines various request parameters such as cookies or JSON fields, which allows you to limit connections based not only on the source IP address, but also on session identifiers, usernames, or email addresses. This additional level of granularity enables you to enhance the overall security of a platform based on any origin data.

Note that the rate limiting described in this article is one of the load control methods provided by Wallarm; alternatively, you can apply brute force protection. Use rate limiting to slow down incoming traffic and brute force protection to completely block the attacker.

Creating and applying the rule

To set and apply a rate limit:

-

Proceed to Wallarm Console:

- Rules → Add rule or your branch → Add rule.

- Attacks / Incidents → attack/incident → hit → Rule.

- API Discovery (if enabled) → your endpoint → Create rule.

-

Choose Mitigation controls → Advanced rate limiting.

-

In the If request is section, describe the scope to apply the rule to.

-

Set a desired limit for connections to your scope:

- Maximum number of requests per second or minute.

-

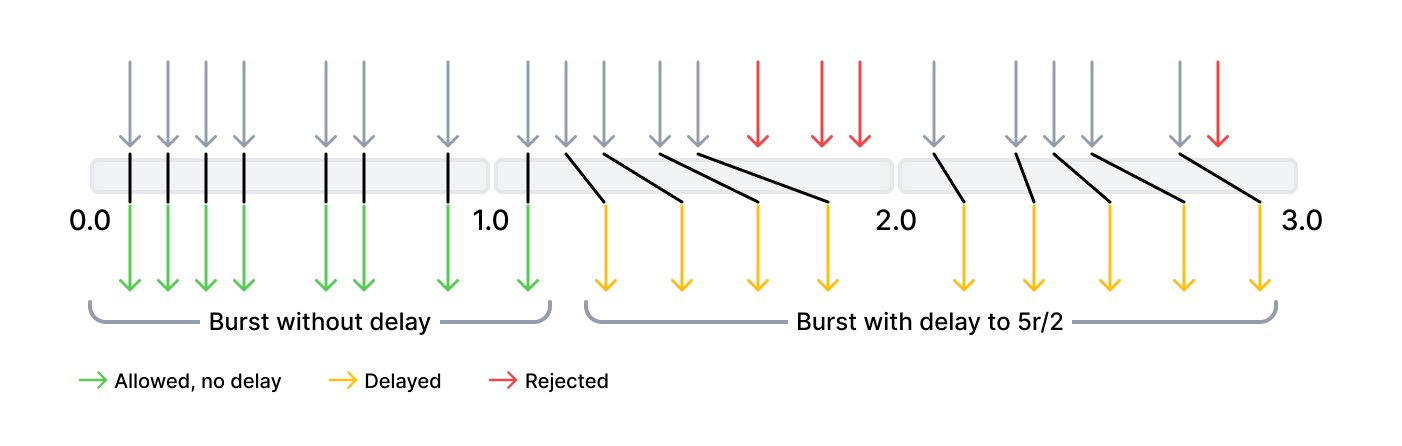

Burst - maximum number of excessive requests to be buffered once the specified RPS/RPM is exceeded and to be processed once the rate is back to normal. `0` by default.

If the value is different from `0`, you can control whether to keep the defined RPS/RPM between the execution of buffered excessive requests. No delay means that all buffered excessive requests are processed simultaneously, without the rate limit delay. Delay means that the specified number of excessive requests is processed simultaneously, and the others are processed with the delay set by the RPS/RPM.

-

Response code - code to return in response to rejected requests. `503` by default.

Below is an example of rate limiting behavior with a limit of 5 r/s, burst 12 and delay 8.

The first 8 requests (the value of delay) are transferred by the Wallarm node without delay. The next 4 requests (burst - delay) are delayed so that the defined rate of 5 r/s is not exceeded. The next 3 requests are rejected because the total burst size has been exceeded. Subsequent requests are delayed.

-

In the In this part of request section, specify the request points for which you want to set limits. Wallarm will restrict requests that have the same values for the selected request parameters.

All available points are described here; choose those matching your particular use case, e.g.:

- `remote_addr` to limit connections by origin IP

- `json` → `json_doc` → `hash` → `api_key` to limit connections by the `api_key` JSON body parameter

Restrictions on the value length

The maximum allowed length of parameter values by which you measure limits is 8000 symbols.

-

Wait for the rule compilation and uploading to the filtering node to complete.
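The burst and delay example from the steps above (5 r/s, burst 12, delay 8) can be sketched in Python. This is a deliberately simplified model and not Wallarm's implementation: it assumes that all requests sharing the same key arrive at the same instant, and the function name `classify_requests` is hypothetical.

```python
def classify_requests(n_requests, rate_per_second, burst, delay):
    """Classify requests that arrive at once under a simplified burst/delay model.

    Assumption: every request arrives at the same instant, so only the
    position of a request in the batch decides its outcome.
    """
    outcomes = []
    for position in range(n_requests):
        if position < delay:
            # Within the "delay" allowance: passed on immediately.
            outcomes.append(("forwarded", 0.0))
        elif position < burst:
            # Buffered: released one by one at the configured rate.
            wait_seconds = (position - delay + 1) / rate_per_second
            outcomes.append(("delayed", wait_seconds))
        else:
            # Buffer is full: answered with the configured response code.
            outcomes.append(("rejected", None))
    return outcomes


outcomes = classify_requests(15, rate_per_second=5, burst=12, delay=8)
counts = {}
for status, _ in outcomes:
    counts[status] = counts.get(status, 0) + 1
print(counts)  # {'forwarded': 8, 'delayed': 4, 'rejected': 3}
```

In this model the four delayed requests wait 0.2, 0.4, 0.6 and 0.8 seconds, which keeps their release rate at 5 r/s. The behavior of later requests, once the buffer starts to drain, is not modeled here.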

Rule examples

Limiting connections by IP to ensure high API availability

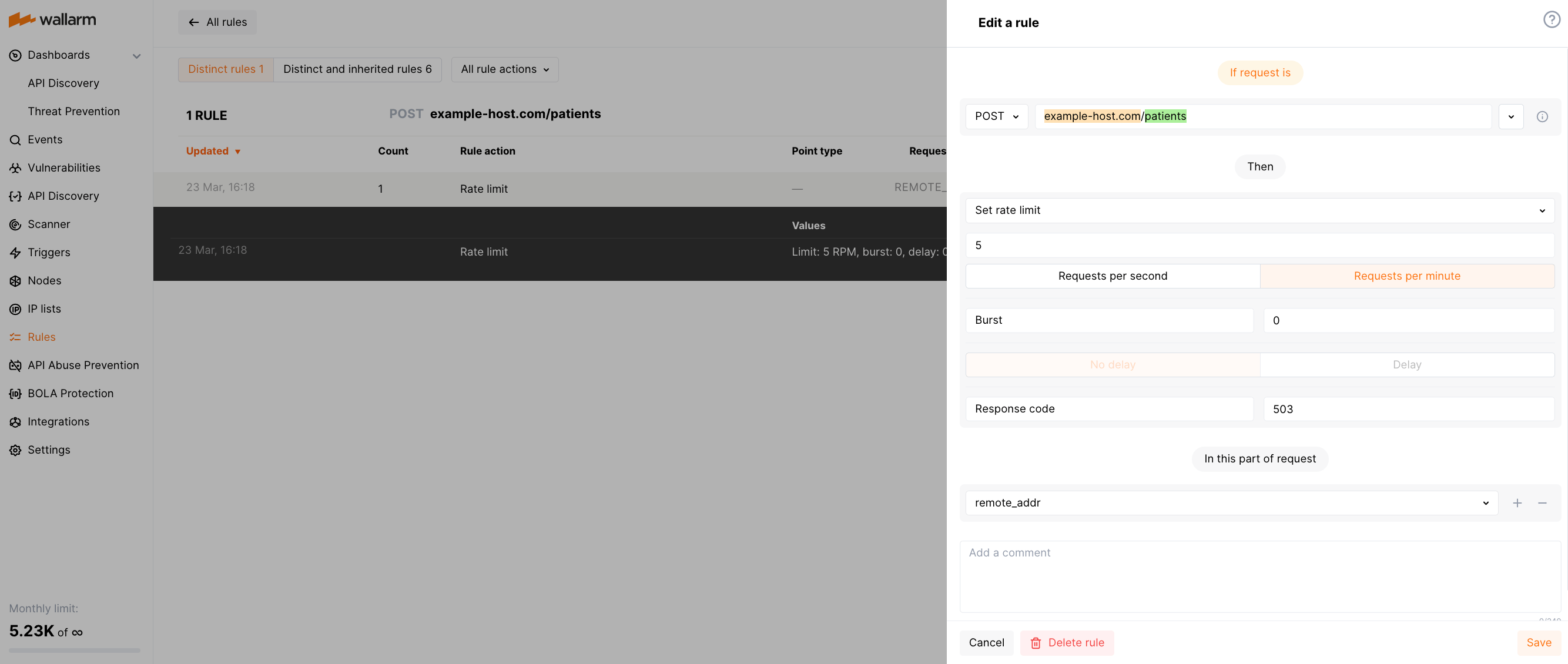

Suppose a healthcare company's REST API lets doctors submit patient information through POST requests to the /patients endpoint of the https://example-host.com host. The availability of this endpoint is critical, so it must not be overwhelmed by a large number of requests.

Limiting connections by IP within a certain period of time specifically for the /patients endpoint could prevent this. This ensures the stability and availability of the endpoint to all doctors, while also protecting the security of patient information by preventing DoS attacks.

For example, the limit can be set to 5 POST requests per minute for each IP address.
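To make the effect of a per-IP limit concrete, here is a minimal Python sketch of a fixed-window counter keyed by client IP. It only illustrates the general technique; the source does not describe the algorithm Wallarm uses internally, and the class name `FixedWindowLimiter` and the sample IP addresses are assumptions made for the example.

```python
class FixedWindowLimiter:
    """Allow at most `limit` requests per key in each fixed time window."""

    def __init__(self, limit, window_seconds):
        self.limit = limit
        self.window_seconds = window_seconds
        self._counters = {}  # key -> (window index, requests seen in that window)

    def allow(self, key, now):
        """Return True if the request is within the limit, False otherwise.

        `now` is a timestamp in seconds, passed in explicitly so the
        behavior is easy to reproduce.
        """
        window = int(now // self.window_seconds)
        seen_window, count = self._counters.get(key, (window, 0))
        if seen_window != window:
            count = 0  # a new window has started, reset the counter
        if count >= self.limit:
            self._counters[key] = (window, count)
            return False
        self._counters[key] = (window, count + 1)
        return True


# 5 requests per minute for each IP address
limiter = FixedWindowLimiter(limit=5, window_seconds=60)
decisions = [limiter.allow("203.0.113.7", now=second) for second in range(7)]
print(decisions)  # [True, True, True, True, True, False, False]
```

Each key has its own counter, so a second client (for example `198.51.100.2`) is unaffected by the first client exhausting its quota.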

Limiting connections by sessions to prevent brute force attacks on auth parameters

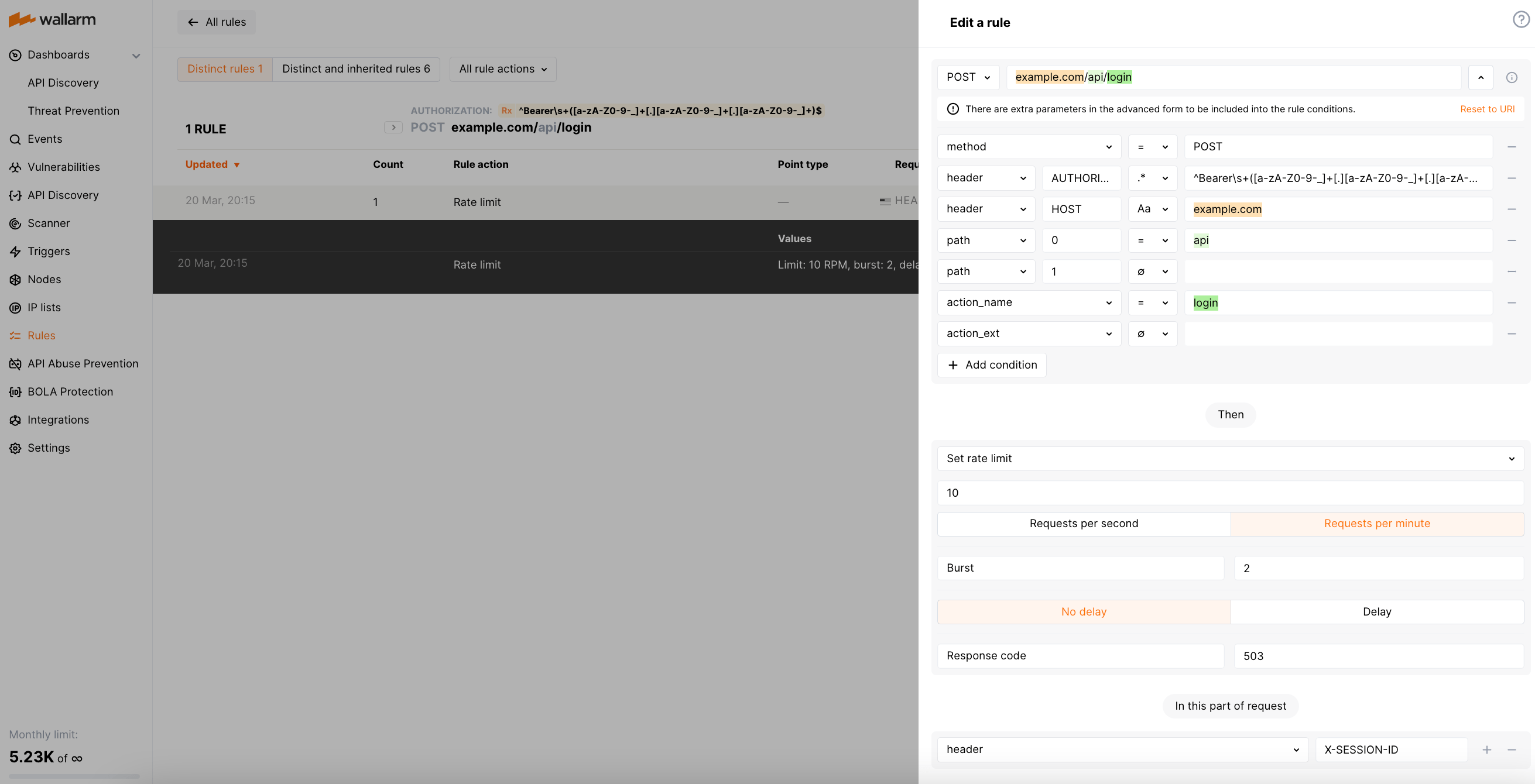

By applying rate limiting to user sessions, you can restrict brute force attempts to find real JWTs or other authentication parameters in order to gain unauthorized access to protected resources. For example, if the rate limit is set to allow only 10 requests per minute per session, an attacker attempting to discover a valid JWT by making multiple requests with different token values will quickly hit the rate limit, and their requests will be rejected until the rate limit period expires.

Suppose your application assigns each user session a unique ID and reflects it in the X-SESSION-ID header. The API endpoint at the URL https://example.com/api/login accepts POST requests that include a Bearer JWT in the Authorization header. For this scenario, the rule limiting connections by sessions will appear as follows:

The regexp used for the Authorization value is `^Bearer\s+([a-zA-Z0-9-_]+[.][a-zA-Z0-9-_]+[.][a-zA-Z0-9-_]+)$`.

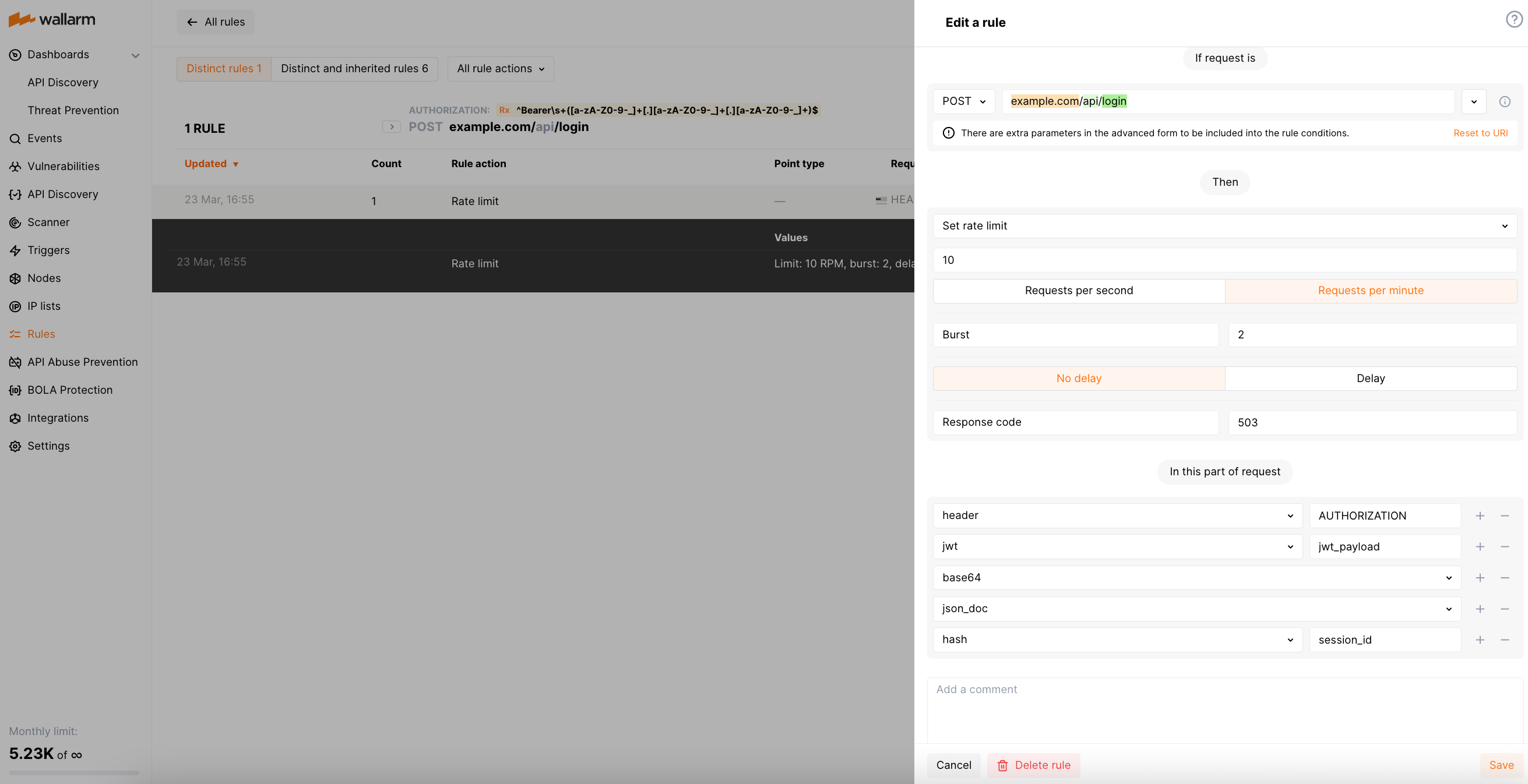
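As a quick sanity check of the Authorization regexp quoted above, the following Python sketch runs it against a few sample header values. It uses Python's `re` module, whose syntax may differ in details from the regexp engine used by the Wallarm node, and the helper name `extract_token` is hypothetical.

```python
import re

# The pattern is copied verbatim from the rule description above.
BEARER_JWT = re.compile(
    r"^Bearer\s+([a-zA-Z0-9-_]+[.][a-zA-Z0-9-_]+[.][a-zA-Z0-9-_]+)$"
)


def extract_token(authorization_value):
    """Return the three-part token if the value matches, otherwise None."""
    match = BEARER_JWT.match(authorization_value)
    return match.group(1) if match else None


print(extract_token("Bearer aaa.bbb.ccc"))  # aaa.bbb.ccc
print(extract_token("Bearer aaa.bbb"))      # None
print(extract_token("Basic dXNlcjpwYXNz"))  # None
```

Only values shaped like `Bearer <header>.<payload>.<signature>` match, so requests with other authorization schemes fall outside the scope of the rule.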

If you use JWTs (JSON Web Tokens) to manage user sessions, you can adjust the rule to decode the JWT and extract the session ID from its payload as follows:

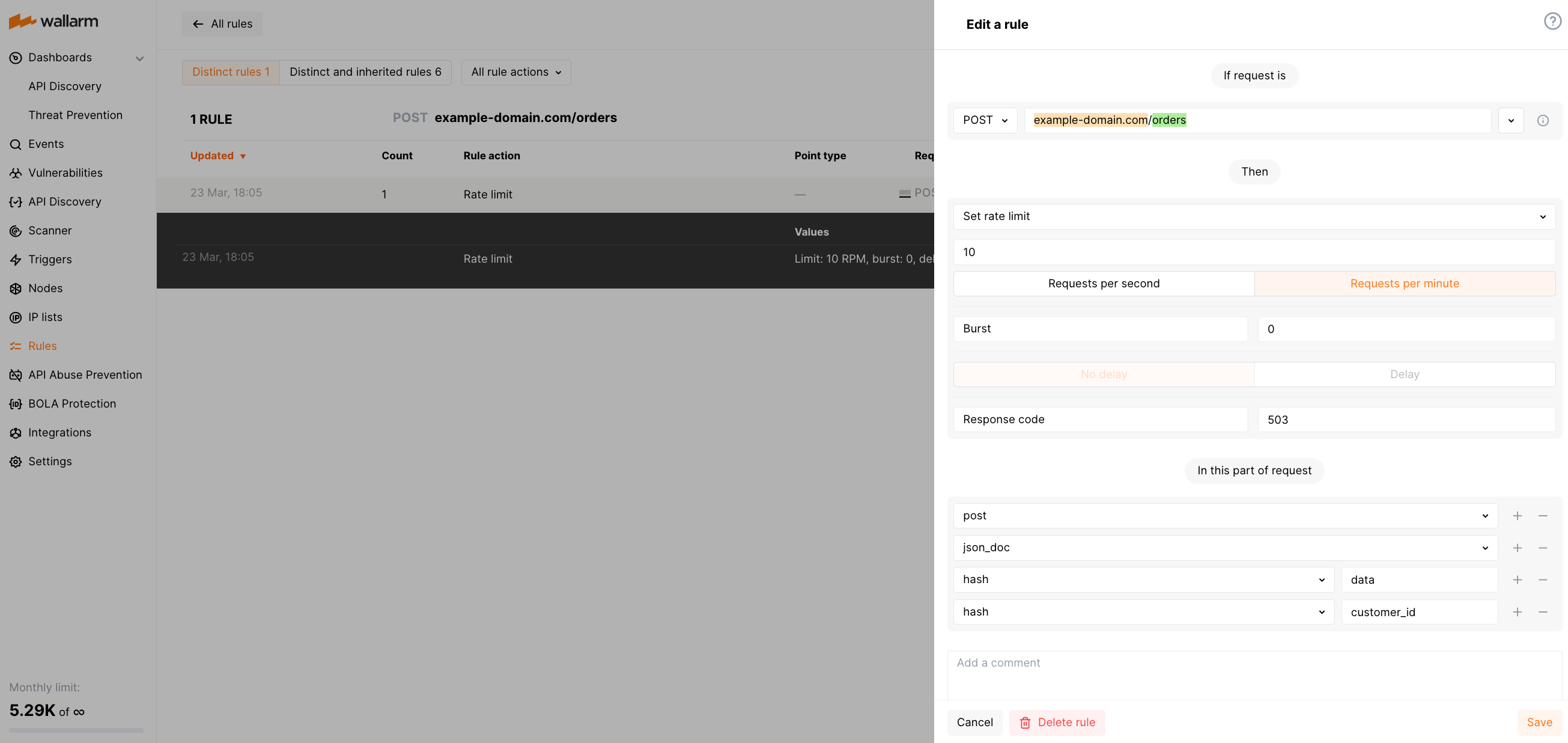
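For readers unfamiliar with the JWT layout, the sketch below shows in Python what extracting a session ID from a token payload amounts to: the middle segment is base64url-decoded and parsed as JSON. It is an illustration only. The claim name `session_id`, the helper names and the demo token are assumptions, and the code does not verify the token signature.

```python
import base64
import json


def b64url_decode(segment):
    """Decode a base64url segment, restoring the padding JWTs omit."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def b64url_encode(data):
    """Encode bytes as unpadded base64url (used here only to build a demo token)."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def session_id_from_jwt(token, claim="session_id"):
    """Return the value of `claim` from the JWT payload, or None if unavailable."""
    parts = token.split(".")
    if len(parts) != 3:
        return None
    try:
        payload = json.loads(b64url_decode(parts[1]))
    except ValueError:  # covers bad base64, bad UTF-8 and bad JSON
        return None
    if not isinstance(payload, dict):
        return None
    return payload.get(claim)


# Demo token with a placeholder signature; the claim name is hypothetical.
demo_token = ".".join([
    b64url_encode(json.dumps({"alg": "none"}).encode()),
    b64url_encode(json.dumps({"session_id": "abc123"}).encode()),
    "signature-placeholder",
])
print(session_id_from_jwt(demo_token))  # abc123
```

The extracted value can then serve as the key by which requests are counted, in the same way as the X-SESSION-ID header value in the previous example.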

Limiting connections by customer IDs to prevent server overwhelm

Let us consider a web service that provides access to customer orders data for an online shopping platform. Rate limiting by customer ID can help prevent customers from placing too many orders in a short period of time, which can put a strain on inventory management and order fulfillment.

For example, a rule can limit each customer to 10 POST requests per minute to https://example-domain.com/orders. This example assumes that the customer ID is passed in the data.customer_id JSON body object.
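The following Python sketch illustrates how a limiting key could be derived from the data.customer_id field of a JSON body. It is a hedged illustration rather than Wallarm's parsing logic; the function name `limit_key` and the sample bodies are hypothetical. Returning `None` stands for "no key found", in which case the request would not be counted against the limit, in line with the limitation described later in this article for requests that lack the selected point.

```python
import json


def limit_key(body, path=("data", "customer_id")):
    """Return the value found at `path` in a JSON body as a string, or None."""
    try:
        node = json.loads(body)
    except (ValueError, TypeError):
        return None  # not valid JSON, so there is nothing to key on
    for part in path:
        if not isinstance(node, dict) or part not in node:
            return None  # the point is absent from this request
        node = node[part]
    if node is None or isinstance(node, (dict, list)):
        return None  # only scalar values are used as keys in this sketch
    return str(node)


print(limit_key('{"data": {"customer_id": 42, "items": [1, 2]}}'))  # 42
print(limit_key('{"data": {"items": [1, 2]}}'))                     # None
```

Requests that produce the same key share one counter, so two orders from customer 42 count toward the same limit regardless of the source IP address.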

Limitations and peculiarities

The rate limit functionality has the following limitations and peculiarities:

-

The rate limiting rule is supported by all Wallarm deployment forms except for:

- OOB Wallarm deployment

- MuleSoft, Amazon CloudFront, Cloudflare, Broadcom Layer7 API Gateway, Fastly connectors

-

The maximum allowed length of parameter values by which you measure limits is 8000 symbols.

-

If you have multiple Wallarm nodes and the incoming traffic on each node matches the rate limit rule, each node applies the limit independently.

-

When multiple rate limit rules apply to incoming requests, the rule with the lowest rate limit is used to limit the requests.

-

If an incoming request does not have the point specified in the In this part of request rule section, then this rule is not applied as a limitation for that request.

-

If your web server is configured to limit connections (e.g. by using the `ngx_http_limit_req_module` NGINX module) and you also apply the Wallarm rule, the web server rejects requests by the configured rules but Wallarm does not.

-

Wallarm does not save requests exceeding the rate limit; it only rejects them by returning the code chosen in the rule. The exception is requests with attack signs: they are recorded by Wallarm even if they are rejected by the rate limiting rule.
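The rule-selection behavior from the list above (the rule with the lowest rate limit wins when several rules apply) can be sketched in Python as follows. The source does not say how limits expressed in different units are compared, so normalizing everything to requests per second is an assumption of this sketch, as are the `RateRule` type and the rule names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RateRule:
    name: str
    requests: int
    per_seconds: int  # 1 for a per-second limit, 60 for a per-minute limit

    @property
    def per_second(self):
        """Limit normalized to requests per second (an assumption of this sketch)."""
        return self.requests / self.per_seconds


def effective_rule(matching_rules):
    """Pick the most restrictive rule among those that match a request."""
    return min(matching_rules, key=lambda rule: rule.per_second)


rules = [
    RateRule("by-ip", requests=5, per_seconds=1),          # 5 r/s
    RateRule("by-session", requests=100, per_seconds=60),  # 100 r/m
]
print(effective_rule(rules).name)  # by-session
```

Here 100 requests per minute is about 1.67 requests per second, which is lower than 5 requests per second, so the session-based rule is the one applied in this model.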

Difference with rate abuse protection

To restrict resource consumption and prevent attacks that use massive numbers of requests, Wallarm provides rate abuse protection in addition to the rate limiting described in this article.

Rate limiting delays some requests when the rate is too high by placing them in a buffer, and rejects the remaining requests once the buffer is full. When the rate is back to normal, the buffered requests are delivered. No blocking by IP or session is applied. Rate abuse protection, in contrast, blocks attackers by their IPs or sessions for some time.