Wallarm Connector for Apigee API Management

This guide describes how to secure your APIs managed by Apigee API Management (APIM) using the Wallarm connector.

Overview

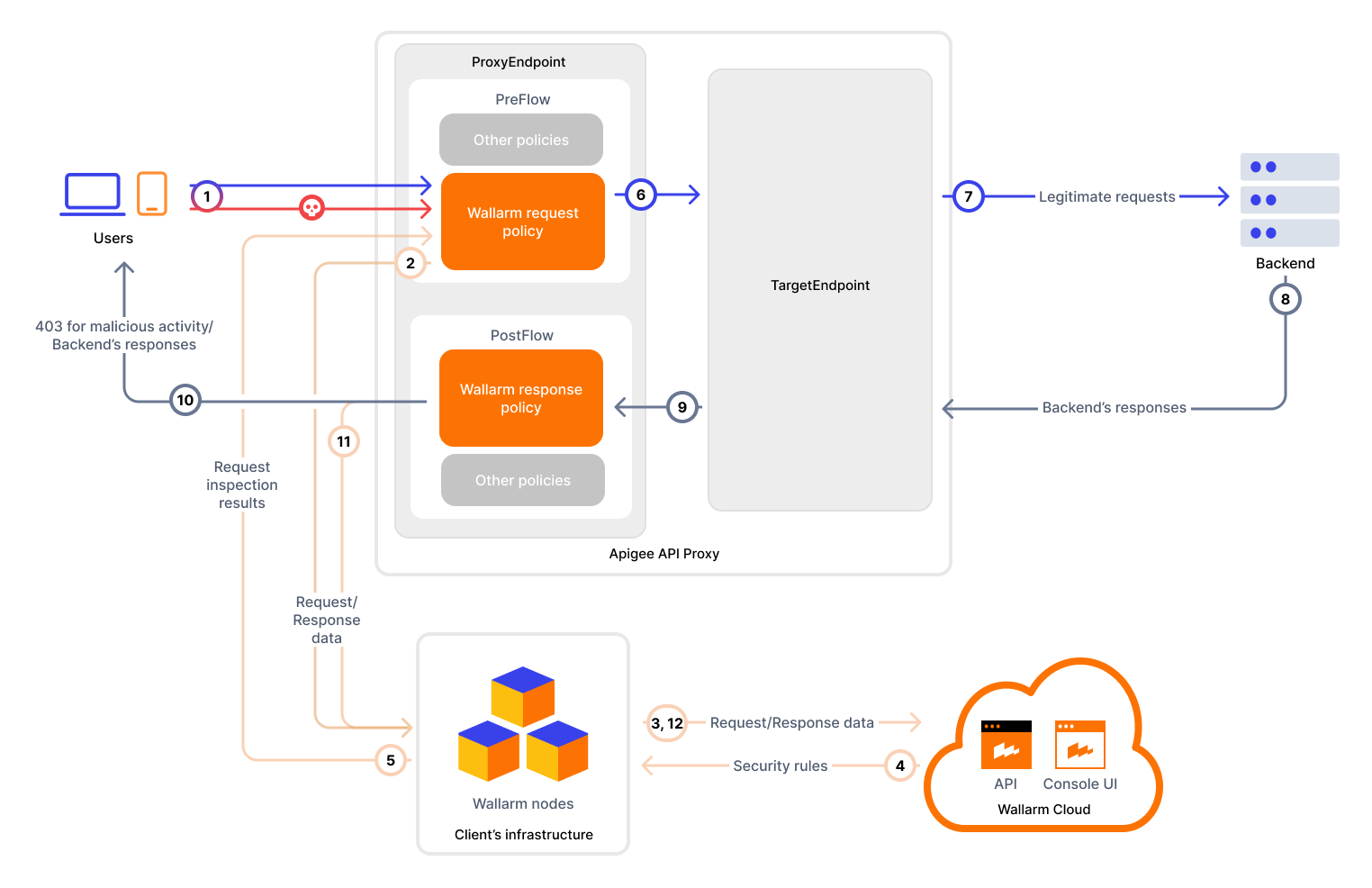

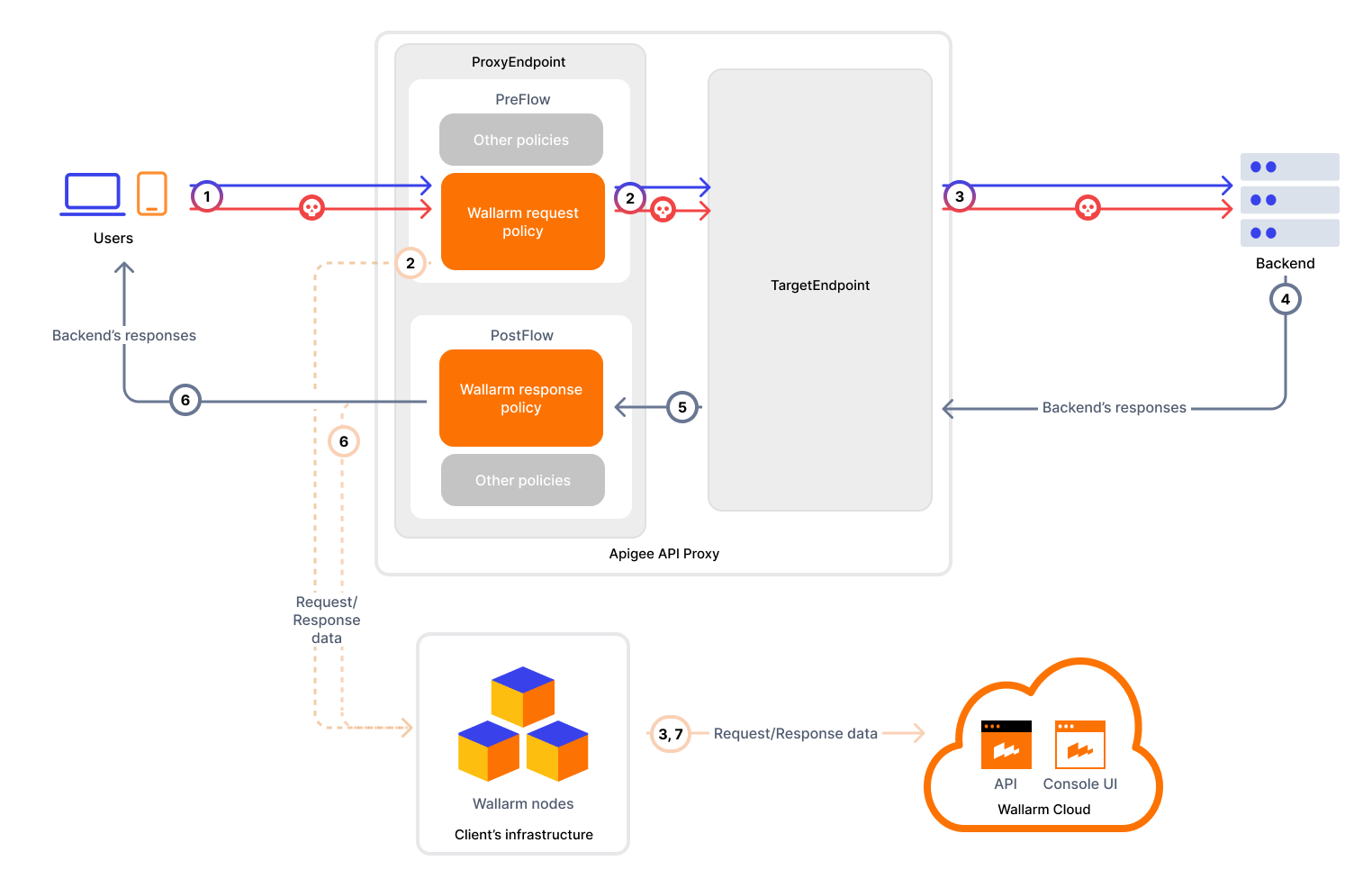

To use Wallarm as a connector for Apigee APIM, you need to deploy the Wallarm Node externally and apply the Wallarm-provided shared flows in Apigee to route traffic to the Wallarm Node for analysis.

The Wallarm connector for Apigee APIM supports both synchronous and asynchronous traffic analysis:

- In synchronous (inline) mode, the policy intercepts requests and sends them to the Wallarm Node for inspection. Depending on the Node's filtration mode, malicious requests may be blocked with status code 403, providing real-time threat mitigation.

- In asynchronous (out-of-band) mode, traffic is mirrored to the Node without affecting the original flow. Malicious requests are logged in Wallarm Console but not blocked.

Use cases

This solution is recommended for securing APIs managed by the Apigee APIM service.

Limitations

- Custom blocking code configuration is not supported.

  All blocked malicious traffic is returned with status code `403` by default. You can customize the block page content, but not the response code itself.

- Rate limiting by Wallarm rules is not supported.

  Rate limiting cannot be enforced on the Wallarm side for this connector. If rate limiting is required, use Apigee policies.

- Multitenancy is not supported on Security Edge hosting, but it is supported for self-hosted nodes deployed with the connector.

Requirements

Before deployment, ensure the following prerequisites are met:

- Familiarity with Apigee API Management.

- APIs published via Apigee. Supported distributions:

  - Apigee OPDK

  - Apigee Edge

  - Apigee X and Hybrid

- A user with the Environment Admin or Org Admin role in Apigee.

- Google Cloud SDK (gcloud CLI) installed and configured (if managing Apigee from the CLI).

- Access to the Administrator account in Wallarm Console for the US Cloud or EU Cloud.

- Native Node version 0.18.0 or higher.

- A valid trusted SSL/TLS certificate for the Wallarm Node domain (self-signed certificates are not supported).

Deployment

This guide shows deployment primarily via the Google Cloud Console and the Apigee REST API. For automation, use the Apigee Terraform provider or refer to the full Apigee API reference.

1. Deploy a Wallarm Node

The Wallarm Node is a core component of the Wallarm platform that you need to deploy. It inspects incoming traffic, detects malicious activities, and can be configured to mitigate threats.

You can deploy it either hosted by Wallarm or in your own infrastructure, depending on the level of control you require.

To deploy a Wallarm-hosted node for the connector, follow the instructions.

Choose an artifact for a self-hosted node deployment and follow the attached instructions:

- All-in-one installer for Linux infrastructures on bare metal or VMs

- Docker image for environments that use containerized deployments

- Helm chart for infrastructures utilizing Kubernetes

Required Node version

Please note that the Apigee APIM connector is supported only by the Native Node version 0.18.0+.

2. Obtain the connector code bundle

Contact sales@wallarm.com to get the Apigee connector code bundle.

The bundle contains:

- Wallarm shared flow bundles to deploy in Apigee:

  - `Wallarm-Sync-Request-Flow.zip` and `Wallarm-Sync-Response-Flow.zip` for synchronous analysis

  - `Wallarm-Async-Request-Flow.zip` and `Wallarm-Async-Response-Flow.zip` for asynchronous analysis

- Sample, ready-to-use API proxies you can modify and reuse:

  - `wallarm-single-proxy-sync` - sample proxy with the Wallarm connector policies preconfigured for synchronous analysis

  - `wallarm-single-async-proxy` - sample proxy with the Wallarm connector policies preconfigured for asynchronous analysis

  While this guide walks you through deployment from scratch, these samples can serve as a shortcut or reference.

  To run the sample proxies, you must also create the `WallarmConfig` KVM in the target environment and deploy the corresponding shared flows.

3. Create a key value map in Apigee¶

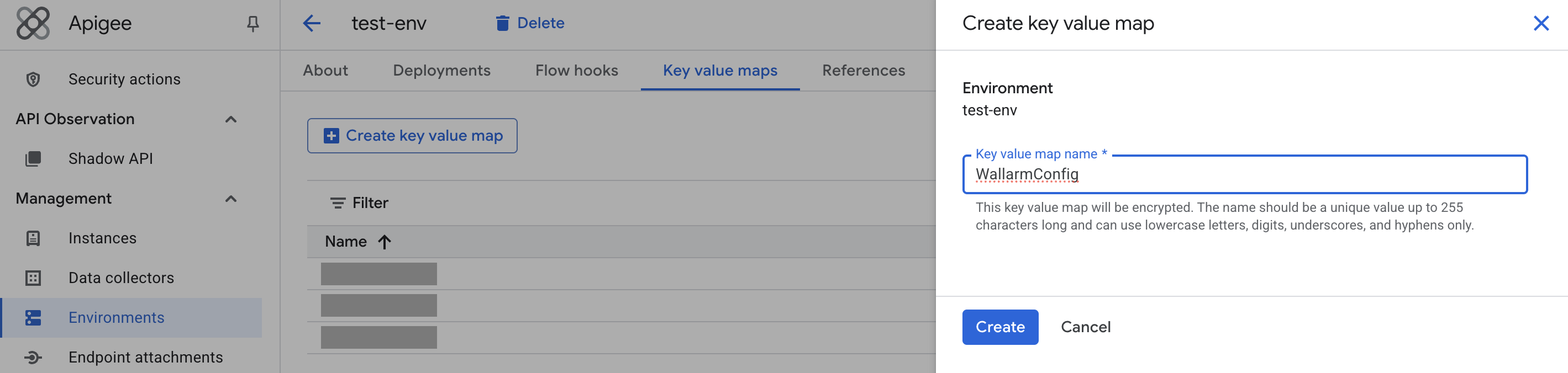

Define the WallarmConfig key value map (KVM) to store Wallarm connector configuration. Using a KVM allows you to change parameters without modifying policy code.

-

Create the

WallarmConfigKVM:Use the following Apigee API call to create a KVM at the environment level:

curl -X POST \ -H "Authorization: Bearer $(gcloud auth print-access-token)" \ -H "Content-Type: application/json" \ -d '{ "name": "WallarmConfig", "encrypted": true }' \ "https://apigee.googleapis.com/v1/organizations/<APIGEE_ORG_ID>/environments/\ <APIGEE_ENV>/keyvaluemaps"<APIGEE_ORG_ID>- the Google Cloud project name,<APIGEE_ENV>- the Apigee environment.Alternatively, you can create a KVM at the API proxy or organization level.

- Add entries to the `WallarmConfig` KVM:

  | KVM entry | Description | Required? |
  | --- | --- | --- |
  | `node_url` | Full domain name of your Wallarm Node including protocol (e.g., `https://wallarm-node-instance.com`). | Yes |
  | `ignore_errors` | Defines error-handling behavior in synchronous traffic analysis when the Node is unavailable (e.g., timeouts): `true` (default) - requests are forwarded to APIs when the Node is not available; `false` - requests are blocked with status code `403` when the Node is not available. The default is `true`, meaning requests are always forwarded to APIs when the Node is unavailable. | No |

  Use the following Apigee API call to add entries to the environment-level KVM:

  ```shell
  curl -X POST \
    -H "Authorization: Bearer $(gcloud auth print-access-token)" \
    -H "Content-Type: application/json" \
    -d '{ "name": "node_url", "value": "<WALLARM_NODE_URL>" }' \
    "https://apigee.googleapis.com/v1/organizations/<APIGEE_ORG_ID>/environments/<APIGEE_ENV>/keyvaluemaps/WallarmConfig/entries"
  ```

  Alternatively, you can add entries to a KVM at the API proxy or organization level.

  Entries must be created at the same level where the KVM itself was originally created.
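The same entries endpoint can be reused for the optional `ignore_errors` entry. The following is a minimal sketch, assuming an environment-level `WallarmConfig` KVM; the function names (`kvm_entries_url`, `kvm_entry_body`, `kvm_put_entry`) are hypothetical helpers introduced here for illustration, not part of the Wallarm bundle.

```shell
# Hypothetical helper: entries URL of the environment-level WallarmConfig KVM.
kvm_entries_url() {
  local org="$1" env="$2"
  printf 'https://apigee.googleapis.com/v1/organizations/%s/environments/%s/keyvaluemaps/WallarmConfig/entries' "$org" "$env"
}

# Hypothetical helper: JSON body for one entry.
# Assumes the name and value contain no quotes or backslashes.
kvm_entry_body() {
  printf '{ "name": "%s", "value": "%s" }' "$1" "$2"
}

# Create one KVM entry. This performs a real API call,
# so it is only defined here and not invoked.
kvm_put_entry() {
  local org="$1" env="$2" name="$3" value="$4"
  curl -sS -X POST \
    -H "Authorization: Bearer $(gcloud auth print-access-token)" \
    -H "Content-Type: application/json" \
    -d "$(kvm_entry_body "$name" "$value")" \
    "$(kvm_entries_url "$org" "$env")"
}

# Example invocation (same placeholders as in the calls above):
#   kvm_put_entry "<APIGEE_ORG_ID>" "<APIGEE_ENV>" ignore_errors false
```

KVM values are stored as strings, so `false` is sent as `"false"`.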

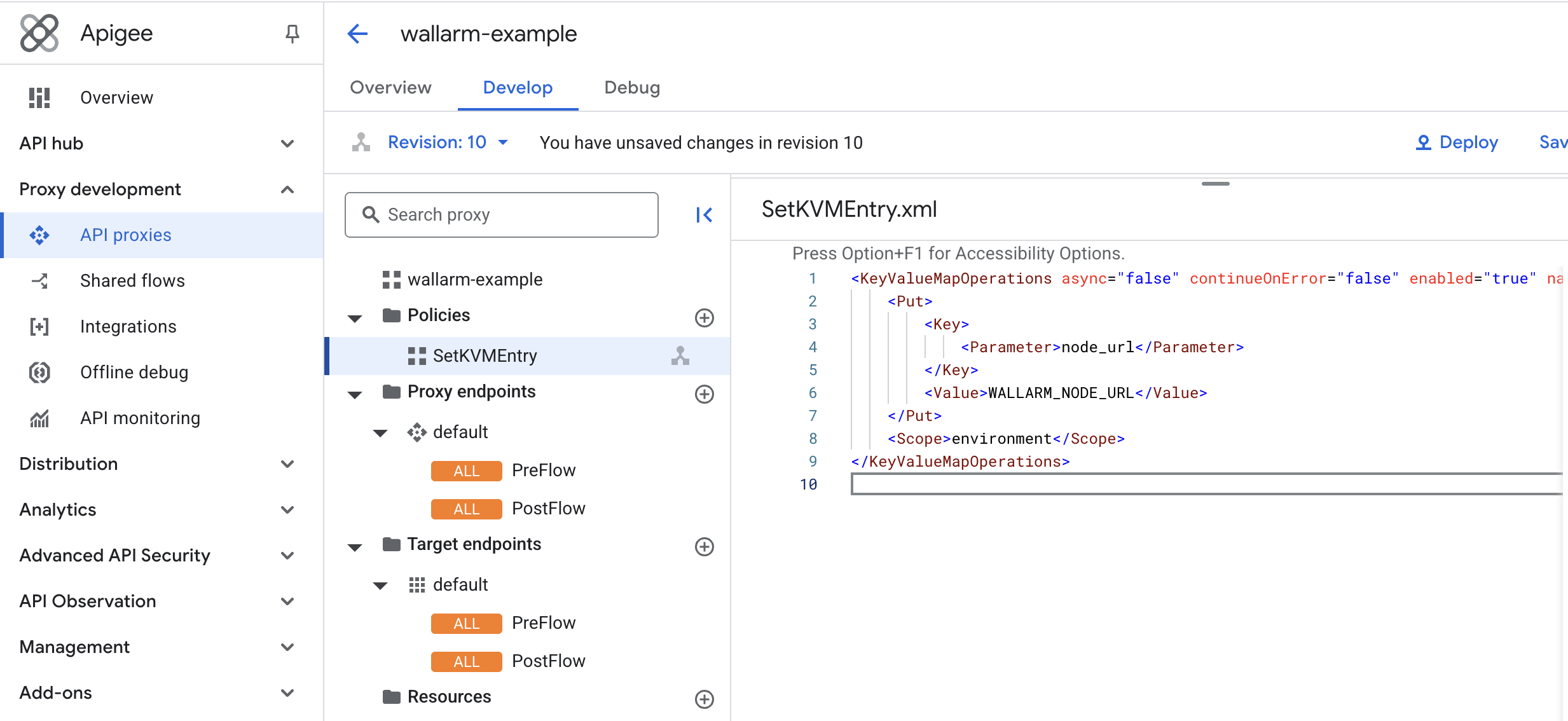

Add entries by creating a

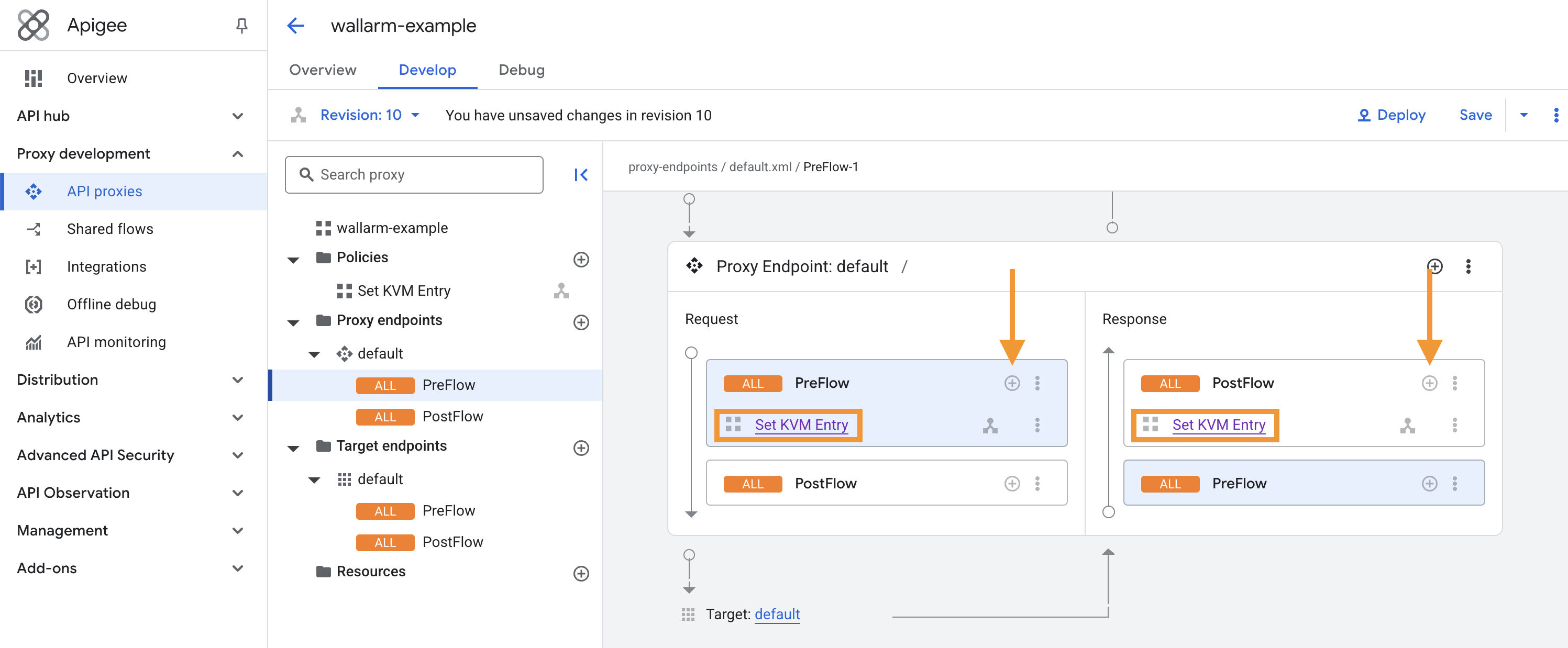

KeyValueMapOperationspolicy inside your API proxy:-

In Google Cloud Console → Proxy development → API proxies → select your API proxy → Policies, Add policy with the following XML:

-

Attach the policy to Request PreFlow and Response PostFlow of the proxy endpoint:

-

Save and Deploy a new API proxy revision.
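The policy XML itself is not reproduced in this text. For orientation only, the following sketch writes a generic `KeyValueMapOperations` policy file that puts `node_url` into the environment-scoped `WallarmConfig` KVM. The policy name `KVM-Wallarm-Config`, the file path, and the example Node URL are assumptions; this is not the Wallarm-provided policy, so prefer the XML supplied with the connector if it differs.

```shell
# Hypothetical location of the proxy bundle's policies directory.
policy_dir="${POLICY_DIR:-apiproxy/policies}"
mkdir -p "$policy_dir"

# Write a minimal KeyValueMapOperations policy (assumed name: KVM-Wallarm-Config).
# The quoted 'EOF' keeps the XML literal, with no shell expansion.
cat > "$policy_dir/KVM-Wallarm-Config.xml" <<'EOF'
<KeyValueMapOperations name="KVM-Wallarm-Config" mapIdentifier="WallarmConfig">
  <Scope>environment</Scope>
  <Put override="true">
    <Key>
      <Parameter>node_url</Parameter>
    </Key>
    <Value>https://wallarm-node-instance.com</Value>
  </Put>
</KeyValueMapOperations>
EOF
```

The `<Scope>` value has to match the level at which the KVM was created, in line with the note that entries must be created at the same level as the KVM.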

4. Deploy Wallarm shared flows¶

Each traffic analysis mode (synchronous or asynchronous) requires 2 shared flows: one for requests and one for responses.

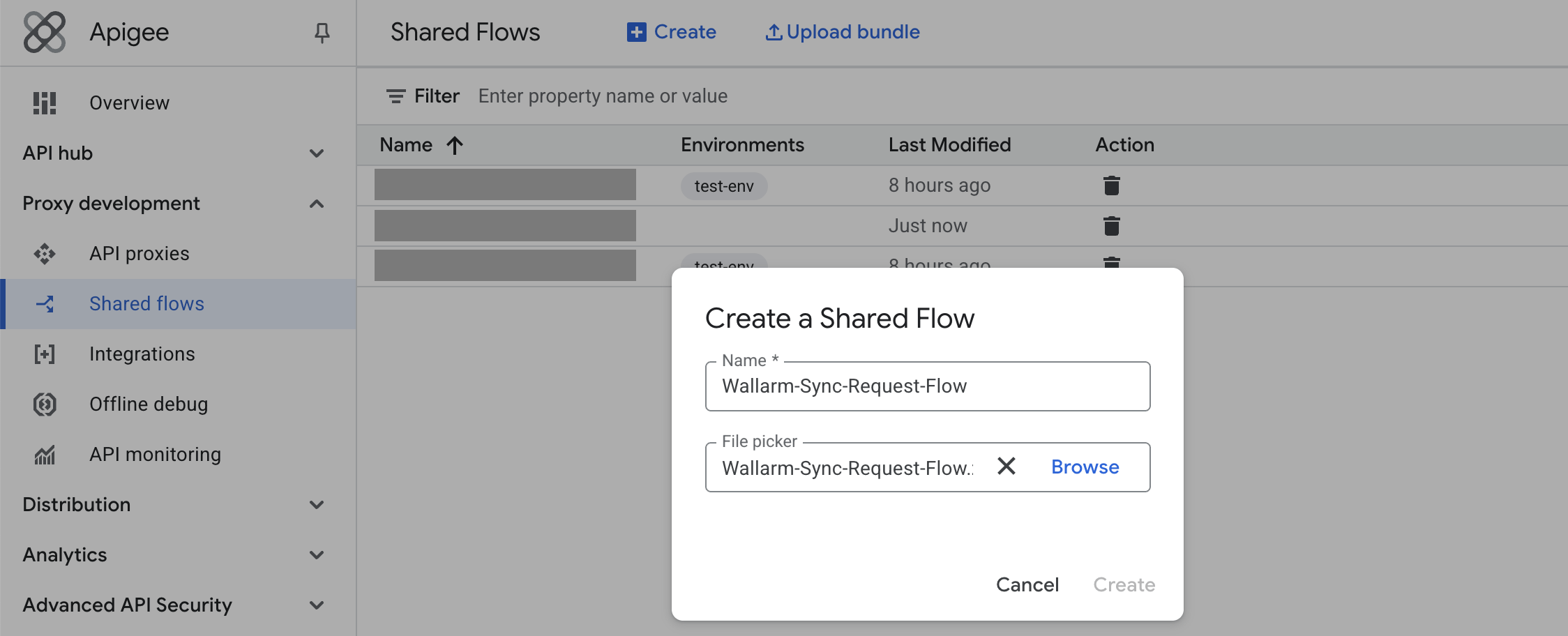

-

In Google Cloud Console → Proxy development → Shared flows, Upload bundle from

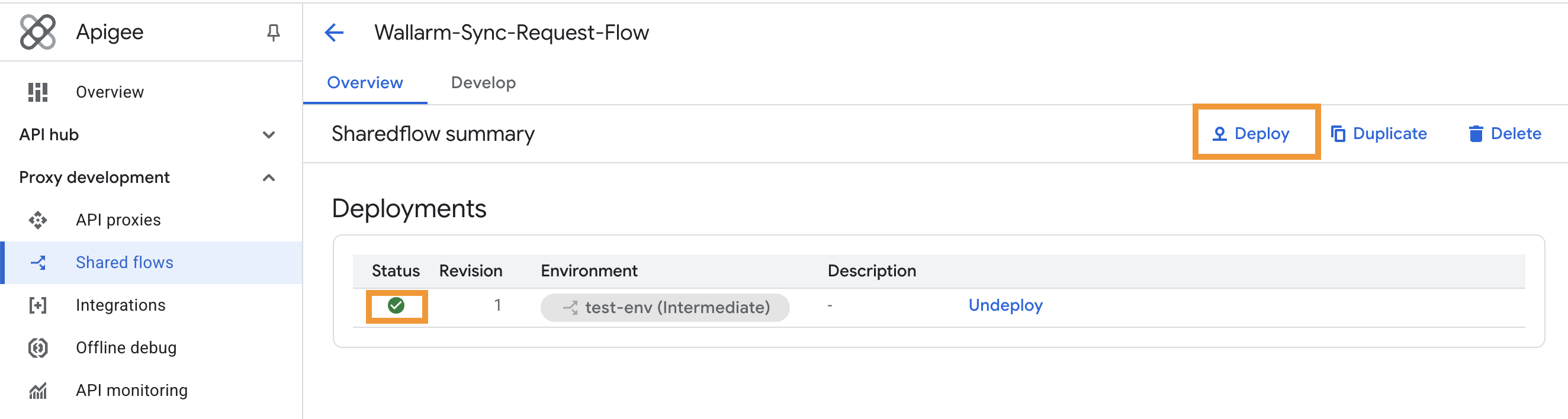

Wallarm-Sync-Request-Flow.zipfor synchronous mode or fromWallarm-Async-Request-Flow.zipfor asynchronous mode. -

Deploy the uploaded flow. Verify that it shows a green "Ok" status for each target environment.

-

In the same section, upload the corresponding response flow archive (

Wallarm-Sync-Response-Flow.ziporWallarm-Async-Response-Flow.zip). -

Deploy the response shared flow.

Alternatively, you can automate this step using the Apigee API.
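A rough sketch of that automation, assuming the Apigee X/Hybrid API at `apigee.googleapis.com` and a bundle archive named after the shared flow in the current directory. The helper names are hypothetical, and the revision number is passed in by the caller (the import response reports the revision that was created).

```shell
APIGEE_API="https://apigee.googleapis.com/v1"

# URL for importing a shared flow bundle under the given name.
sharedflow_import_url() {
  local org="$1" name="$2"
  printf '%s/organizations/%s/sharedflows?action=import&name=%s' "$APIGEE_API" "$org" "$name"
}

# URL for deploying one shared flow revision to an environment.
sharedflow_deploy_url() {
  local org="$1" env="$2" name="$3" rev="$4"
  printf '%s/organizations/%s/environments/%s/sharedflows/%s/revisions/%s/deployments' \
    "$APIGEE_API" "$org" "$env" "$name" "$rev"
}

# Upload <name>.zip as a multipart form, then deploy the given revision.
# Performs real API calls, so it is only defined here and not invoked.
import_and_deploy() {
  local org="$1" env="$2" name="$3" rev="$4" token
  token="$(gcloud auth print-access-token)"
  curl -sS --fail -X POST -H "Authorization: Bearer $token" \
    -F "file=@${name}.zip" "$(sharedflow_import_url "$org" "$name")" &&
  curl -sS --fail -X POST -H "Authorization: Bearer $token" \
    "$(sharedflow_deploy_url "$org" "$env" "$name" "$rev")"
}

# Example for synchronous mode (placeholders as elsewhere in this guide):
#   import_and_deploy "<APIGEE_ORG_ID>" "<APIGEE_ENV>" Wallarm-Sync-Request-Flow 1
#   import_and_deploy "<APIGEE_ORG_ID>" "<APIGEE_ENV>" Wallarm-Sync-Response-Flow 1
```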

5. Apply shared flows to your APIs¶

You can apply the Wallarm shared flows globally to all API proxies in an environment, or attach them only to specific API proxies.

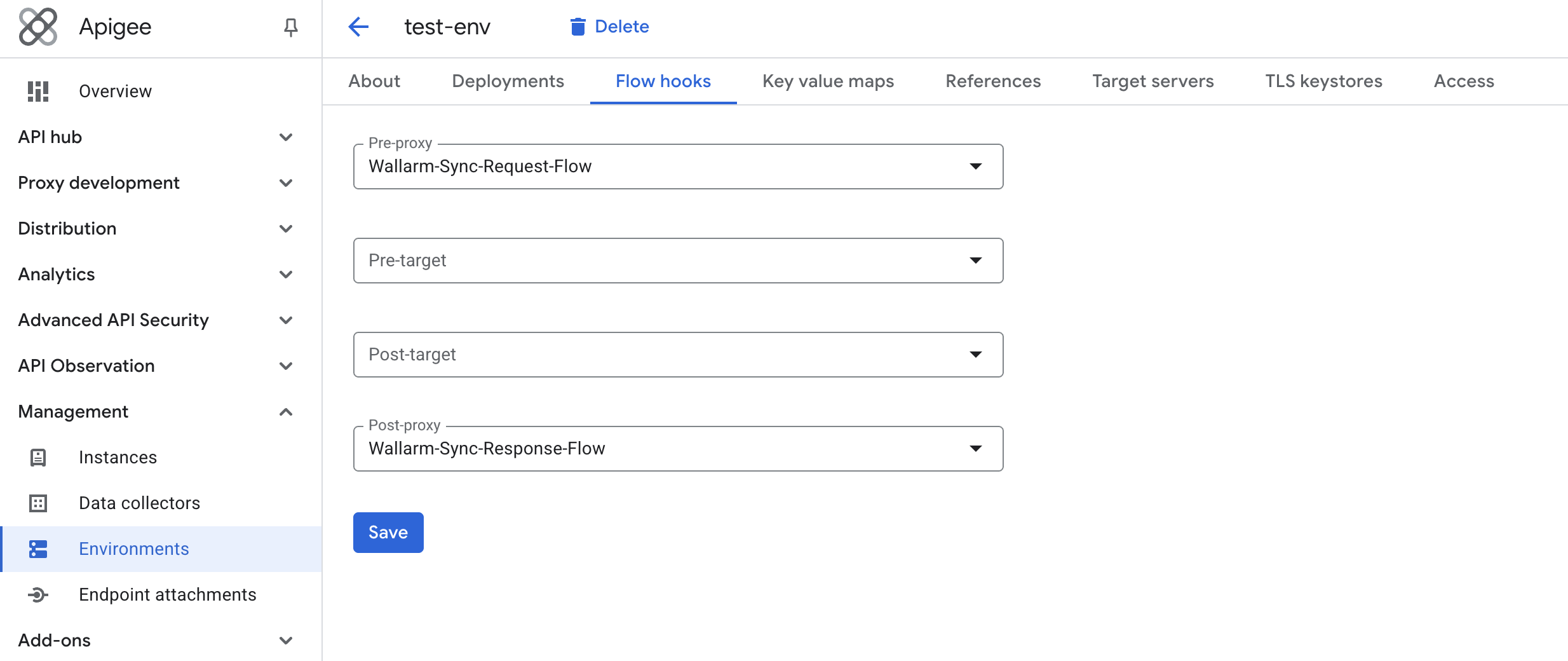

To enable the connector for all proxies in an environment, attach the Wallarm flows as flow hooks:

- Proceed to Google Cloud Console → Management → Environments → select your environment → Flow hooks.

-

Assign the deployed Wallarm flows:

-

Pre-proxy →

Wallarm-Sync-Request-Flowfor synchronous mode orWallarm-Async-Request-Flowfor asynchronous mode.Requests are forwarded (synchronous) or mirrored (asynchronous) to the Wallarm Node for inspection before reaching the API proxy.

-

Post-proxy →

Wallarm-Sync-Response-Flowfor synchronous mode orWallarm-Async-Response-Flowfor asynchronous mode.Responses from the target service are mirrored to the Wallarm Node for inspection.

-

If you already use pre-proxy or post-proxy flow hooks for other policies, you can include the Wallarm flows by referencing them through a FlowCallout policy.

Alternatively, you can automate this step using the Apigee API.
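As a hedged sketch of that automation, the Apigee API exposes flow hooks per environment under `flowhooks/PreProxyFlowHook` and `flowhooks/PostProxyFlowHook`. The helper names below are hypothetical, and the request body sets only `sharedFlow`, leaving other flow hook options at their defaults.

```shell
# Map a mode (Sync or Async) and a direction (Request or Response)
# to the shared flow name used in this guide.
wallarm_flow_name() {
  printf 'Wallarm-%s-%s-Flow' "$1" "$2"
}

# URL of one flow hook in an environment.
flowhook_url() {
  local org="$1" env="$2" hook="$3"
  printf 'https://apigee.googleapis.com/v1/organizations/%s/environments/%s/flowhooks/%s' \
    "$org" "$env" "$hook"
}

# Attach a shared flow to a flow hook. Performs a real API call,
# so it is only defined here and not invoked.
attach_flowhook() {
  local org="$1" env="$2" hook="$3" flow="$4"
  curl -sS --fail -X PUT \
    -H "Authorization: Bearer $(gcloud auth print-access-token)" \
    -H "Content-Type: application/json" \
    -d "{ \"sharedFlow\": \"$flow\" }" \
    "$(flowhook_url "$org" "$env" "$hook")"
}

# Example for synchronous mode:
#   attach_flowhook "<APIGEE_ORG_ID>" "<APIGEE_ENV>" PreProxyFlowHook  "$(wallarm_flow_name Sync Request)"
#   attach_flowhook "<APIGEE_ORG_ID>" "<APIGEE_ENV>" PostProxyFlowHook "$(wallarm_flow_name Sync Response)"
```

A flow hook holds a single shared flow, so this call replaces whatever was attached before; if other policies already use the hook, the FlowCallout approach mentioned above is the safer route.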

You can attach the Wallarm shared flows only to specific API proxies using the Flow Callout policies:

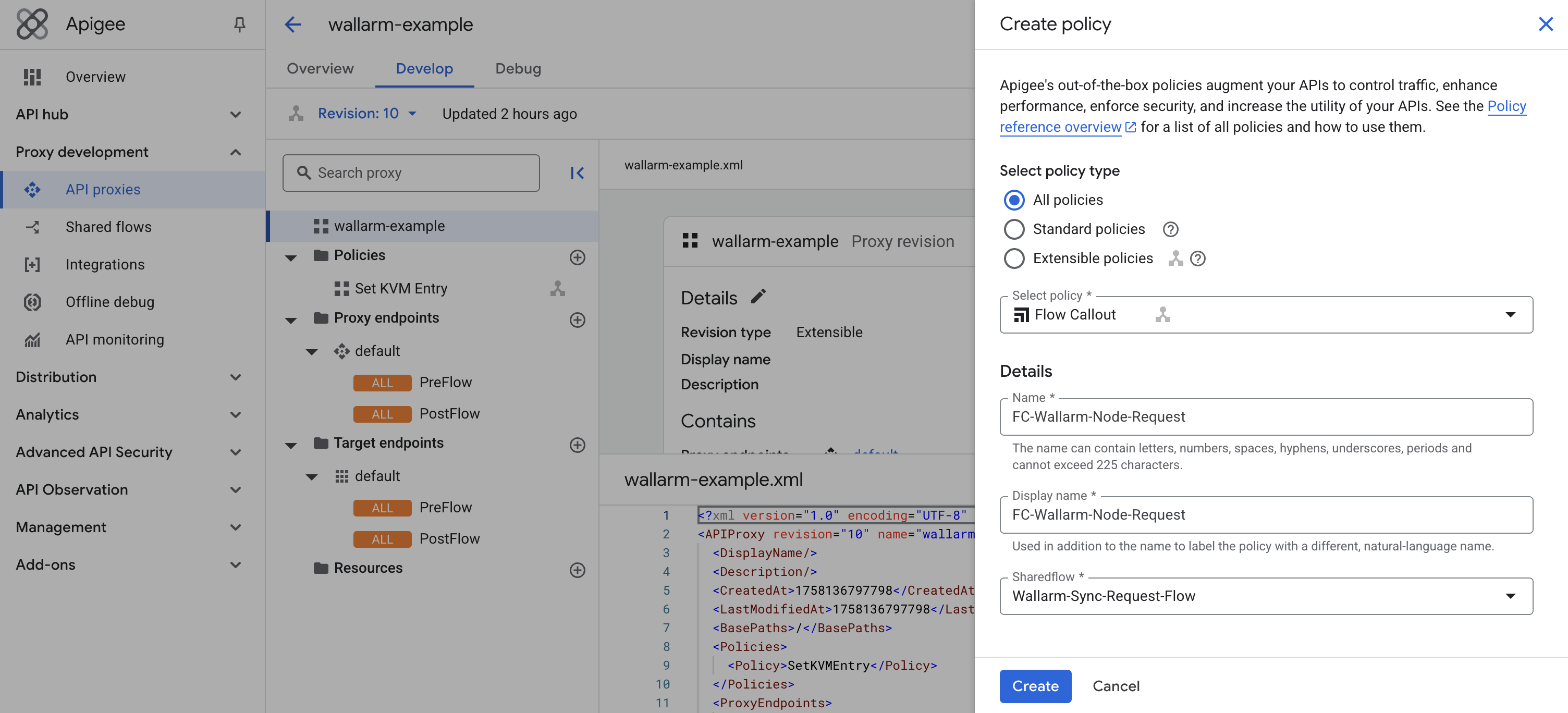

- Proceed to Google Cloud Console → Proxy development → API proxies → select the API proxy to protect → Policies → Add policy.

-

Create the request policy:

- Policy type:

Flow Callout - Name and Display name:

FC-Wallarm-Node-Request - Sharedflow:

Wallarm-Sync-Request-Flowfor synchronous mode orWallarm-Async-Request-Flowfor asynchronous mode

- Policy type:

-

Create the response policy:

- Policy type:

Flow Callout - Name and Display name:

FC-Wallarm-Node-Response - Sharedflow:

Wallarm-Sync-Response-Flowfor synchronous mode orWallarm-Async-Response-Flowfor asynchronous mode

The

FC-Wallarm-Node-Request.xmlandFC-Wallarm-Node-Response.xmlpolicy files are also included in the Wallarm Apigee connector bundle. - Policy type:

-

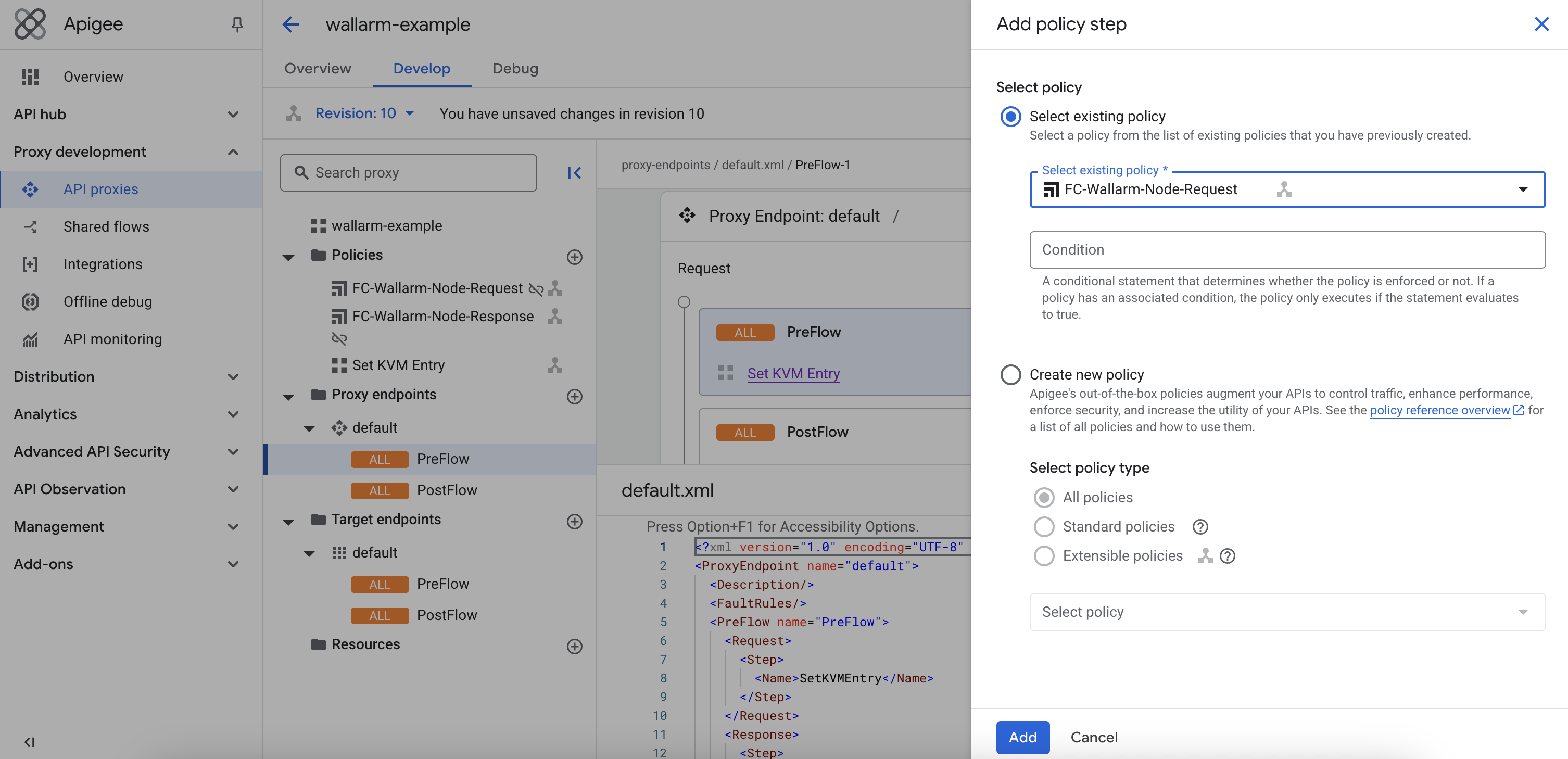

Attach the policies to the proxy flows:

- Request → PreFlow → select

FC-Wallarm-Node-Request - Response → PostFlow → select

FC-Wallarm-Node-Response

- Request → PreFlow → select

-

Add

FC-Wallarm-Node-Responsewith<AlwaysEnforce>true</AlwaysEnforce>to the default fault rule of your proxy.When a proxy returns 4xx/5xx, Apigee skips the

PostFlowby default. Adding the policy to the fault rule ensures the response is still sent to the Wallarm Node. -

Save and Deploy a new API proxy revision.
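The default fault rule step is easy to miss. If you keep the proxy bundle on disk, a quick text check like the sketch below can confirm it is in place. The expected fragment is shown in the comments; the rule name `default-fault`, the file path, and the function name `has_wallarm_fault_step` are assumptions for illustration.

```shell
# Expected fragment in the proxy endpoint file
# (for example apiproxy/proxies/default.xml, a hypothetical path):
#
#   <DefaultFaultRule name="default-fault">
#     <AlwaysEnforce>true</AlwaysEnforce>
#     <Step>
#       <Name>FC-Wallarm-Node-Response</Name>
#     </Step>
#   </DefaultFaultRule>

# Return 0 if the DefaultFaultRule section of the given file contains both
# AlwaysEnforce=true and the Wallarm response callout step.
# This is a line-based text check, not an XML parse: it assumes the opening
# and closing DefaultFaultRule tags sit on separate lines.
has_wallarm_fault_step() {
  local rule
  rule="$(sed -n '/<DefaultFaultRule/,/<\/DefaultFaultRule>/p' "$1")"
  printf '%s\n' "$rule" | grep -q '<AlwaysEnforce>true</AlwaysEnforce>' &&
    printf '%s\n' "$rule" | grep -q '<Name>FC-Wallarm-Node-Response</Name>'
}

# Example:
#   has_wallarm_fault_step apiproxy/proxies/default.xml && echo "fault rule OK"
```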

Testing¶

Test the deployed connector with both legitimate and malicious traffic.

Legitimate traffic¶

-

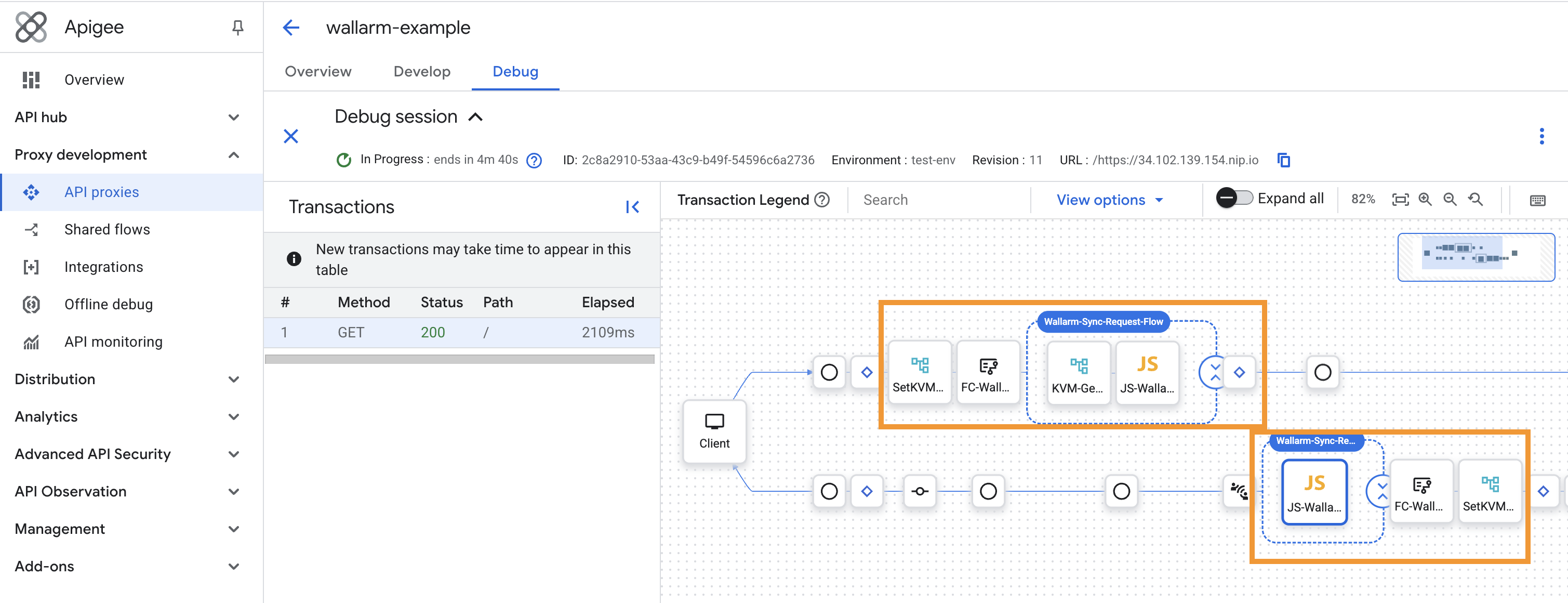

In Google Cloud Console → Proxy development → API proxies → select your API proxy → Debug → Start debug session.

-

Send a legitimate request to the provided URL.

-

Review the transactions in the debug session and confirm that the Wallarm flows are triggered:

If flows are applied at the environment level, the debug view may differ slightly, but

Wallarm-Sync-Request-FlowandWallarm-Sync-Response-Flow(or theirAsynccounterparts) must still appear. -

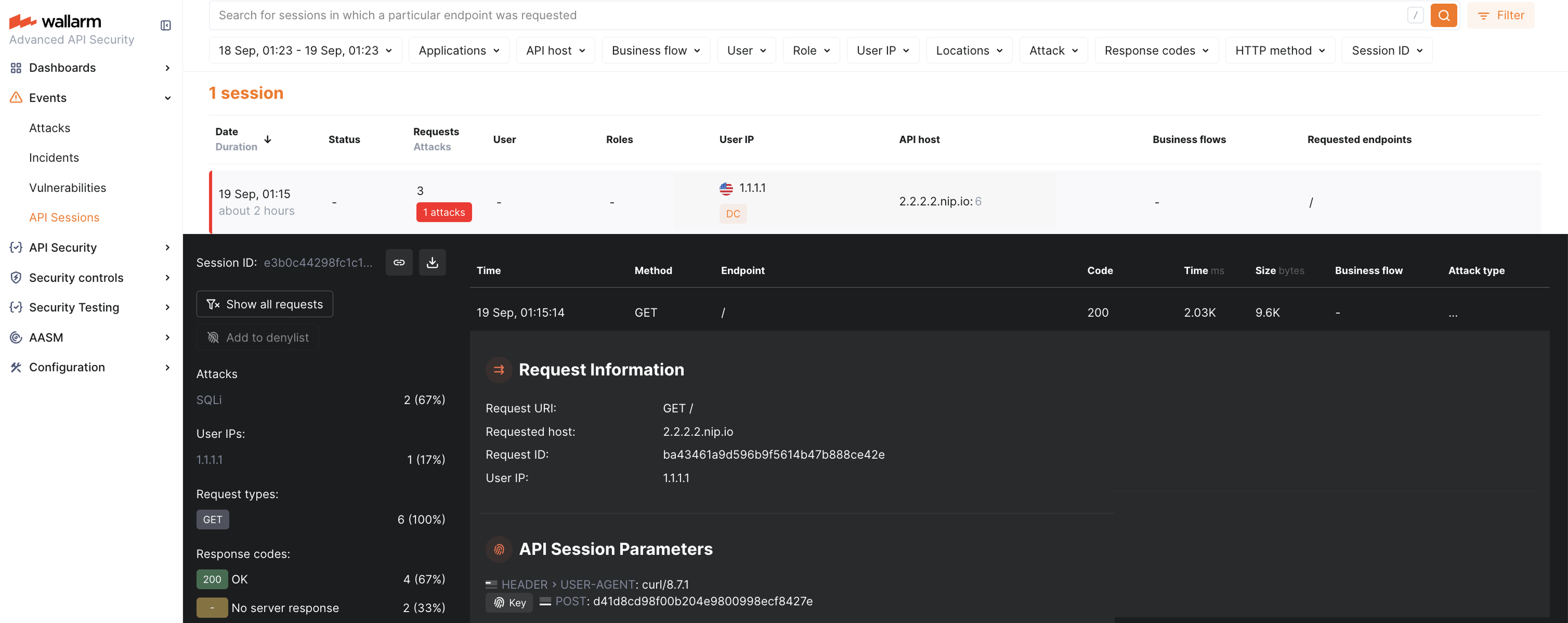

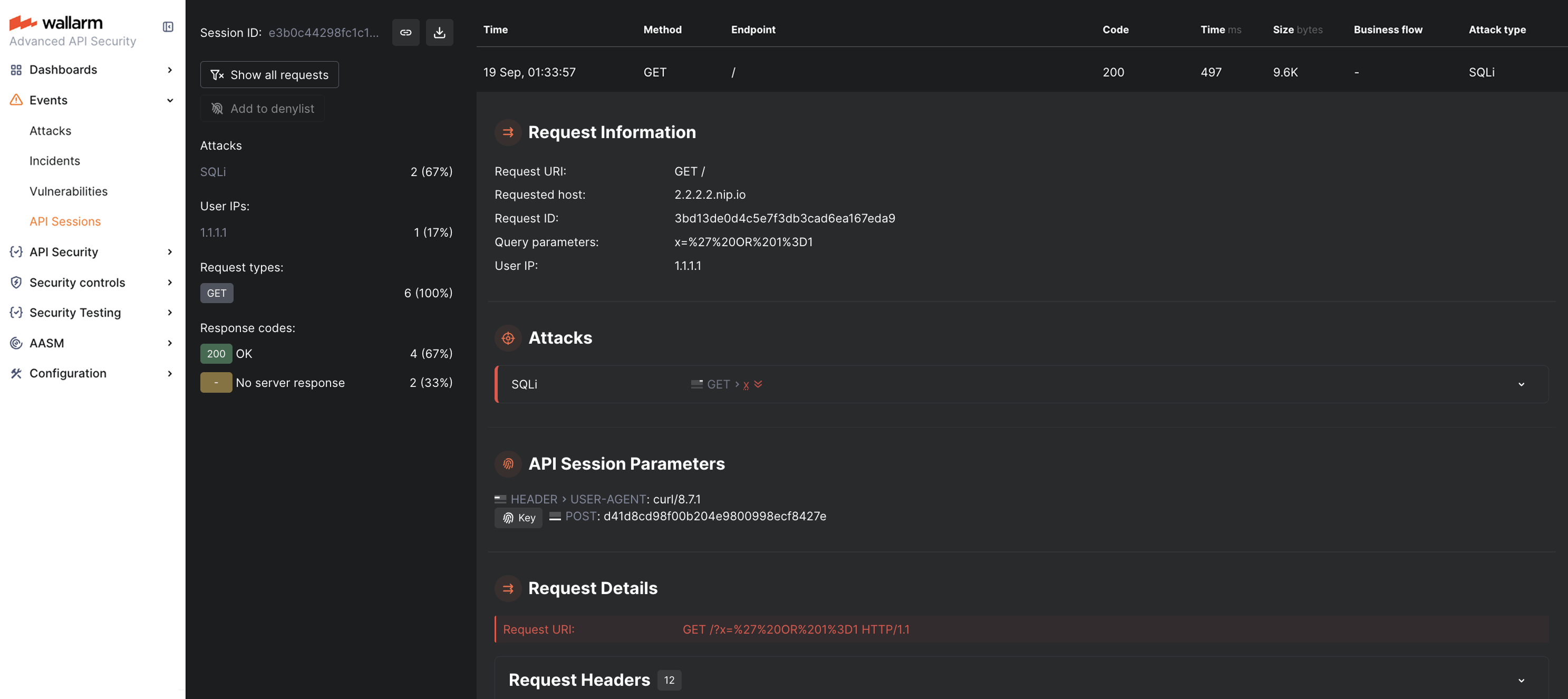

In Wallarm Console → API Sessions, verify that the legitimate request is displayed:

Malicious traffic¶

-

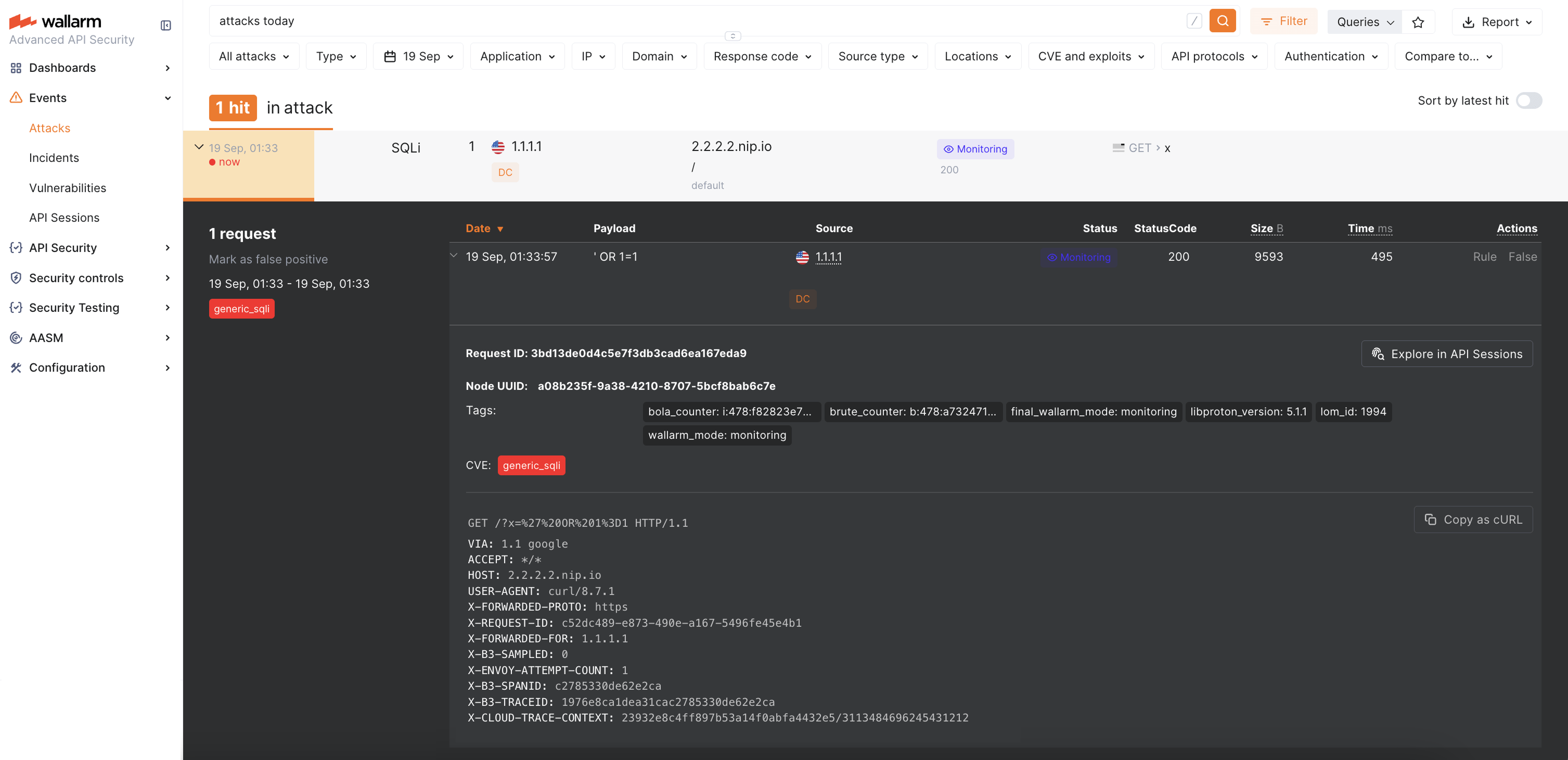

Send a request with a test SQLi attack by adding the query parameter

x='+OR+1=1:- Synchronous mode with blocking enabled: the request is blocked with

403. - Synchronous mode (monitoring): request reaches the API and is logged in Wallarm Console.

- Asynchronous mode: request reaches the API and is logged in Wallarm Console.

- Synchronous mode with blocking enabled: the request is blocked with

-

In Wallarm Console → API Sessions, verify that the malicious request is logged:

-

In Wallarm Console → Attacks, confirm that the attack is listed:
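The test request can be sent from the command line. The sketch below appends the test parameter from this guide to a proxy URL and prints only the HTTP status code; the helper names and the example URL are hypothetical.

```shell
# Append the test SQLi parameter from this guide to a proxy URL,
# using "&" if the URL already has a query string and "?" otherwise.
attack_url() {
  local base="$1"
  case "$base" in
    *\?*) printf "%s&x='+OR+1=1" "$base" ;;
    *)    printf "%s?x='+OR+1=1" "$base" ;;
  esac
}

# Print only the HTTP status code of a GET request.
# Performs a real request, so it is only defined here and not invoked.
status_of() {
  curl -sS -o /dev/null -w '%{http_code}\n' "$1"
}

# Example (hypothetical proxy URL):
#   status_of "https://api.example.com/v1/items"                   # legitimate request
#   status_of "$(attack_url "https://api.example.com/v1/items")"   # test attack
```

Compare the printed status with the mode-specific outcomes listed above: `403` is expected only in synchronous mode with blocking enabled.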

Block page customization

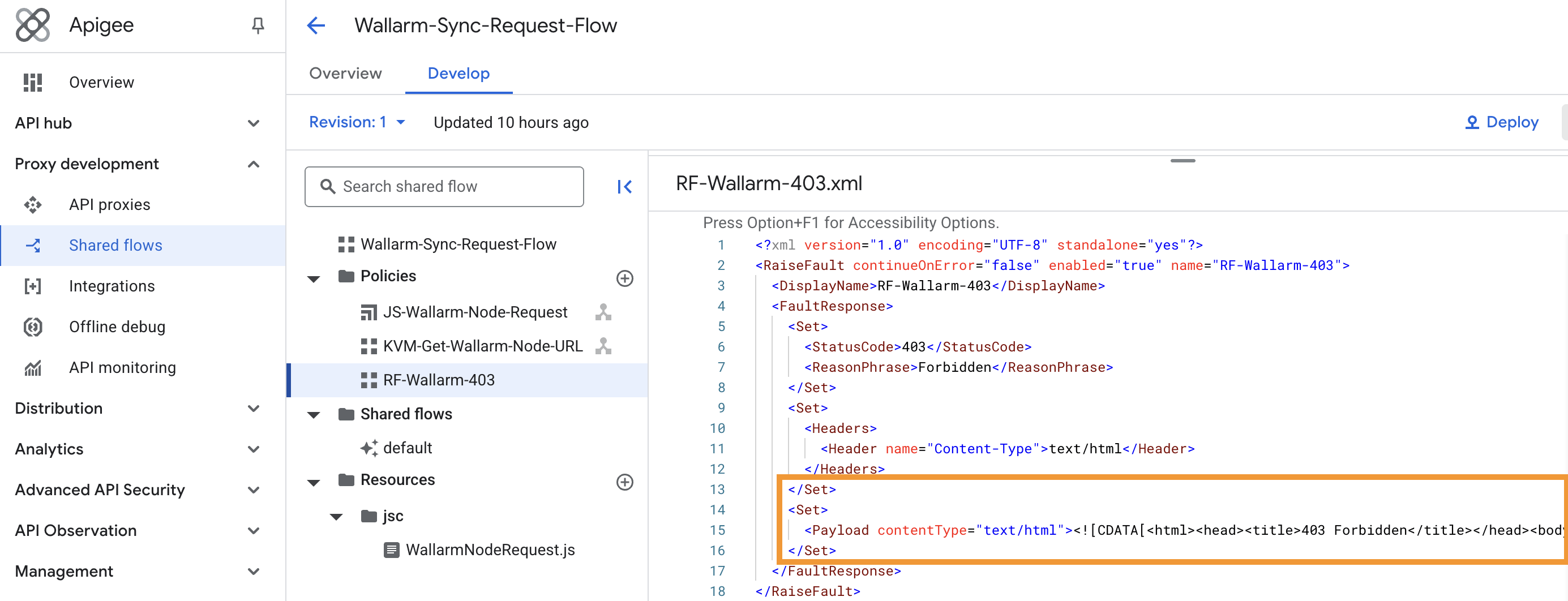

If the Node is deployed in synchronous mode with blocking enabled, you can customize the block page returned for blocked malicious requests:

- Go to Google Cloud Console → Shared Flows → `Wallarm-Sync-Request-Flow` → Develop.

- Edit the `RF-Wallarm-403` RaiseFault policy. This policy defines the error response from Wallarm.

  Update the content inside the `<FaultResponse><Set><Payload>` tag. Make sure the payload is wrapped in CDATA.

- Save and deploy a new flow revision.
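To illustrate the CDATA requirement, the following sketch turns an HTML file into a `<Payload>` element ready to paste into the policy. It assumes the block page is HTML served as `text/html`; the function name `wrap_block_page` is hypothetical, and the actual attributes of the Wallarm-provided `RF-Wallarm-403` policy may differ.

```shell
# Print a <Payload> element that wraps the given HTML file in CDATA.
# Fails if the HTML contains "]]>", which would end the CDATA section early.
wrap_block_page() {
  local html
  html="$(cat "$1")" || return 1
  case "$html" in
    *']]>'*)
      echo "block page must not contain ]]>" >&2
      return 1
      ;;
  esac
  printf '<Payload contentType="text/html"><![CDATA[%s]]></Payload>\n' "$html"
}

# Example (hypothetical file name):
#   wrap_block_page block-page.html
```

Apigee may treat curly braces inside a payload as variable references, so a block page with inline CSS or JavaScript is worth checking in a debug session after deployment.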

Upgrading the policies

To upgrade the deployed Wallarm policies to a newer version:

- Download the updated Apigee connector code bundle from Wallarm.

- Import the new versions of the shared flows (`Wallarm-Sync-*` or `Wallarm-Async-*`) into Apigee, as described in Step 4.

- Deploy the updated shared flows to the required environments.

- Update your API proxies or environment flow hooks to reference the new flow revisions, as described in Step 5.

- Test both legitimate and malicious traffic to verify the upgrade.

Policy upgrades may require a Wallarm Node upgrade, especially for major version updates. See the Native Node changelog for the self-hosted Node release notes. Regular node updates are recommended to avoid deprecation and simplify future upgrades.

Uninstalling the policies

To remove the Wallarm connector from Apigee API Management:

- In Google Cloud Console → Proxy development → API proxies, open the proxy where the connector is applied.

- Remove the `FC-Wallarm-Node-Request` and `FC-Wallarm-Node-Response` policies from PreFlow, PostFlow, and the Default Fault Rule.

- If deployed at the environment level, remove the Wallarm shared flows from Flow hooks.

- Delete the Wallarm shared flows (`Wallarm-Sync-*` or `Wallarm-Async-*`) from Shared flows.

- Remove the `WallarmConfig` KVM or its entries if no longer needed.

- Save and deploy a new revision of the proxy (or updated environment configuration).
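For an environment-level installation, the removal can also be scripted against the Apigee API. The sketch below only prints the `DELETE` targets in a workable order (detach flow hooks, undeploy and delete each shared flow, delete the KVM) so they can be reviewed first. It assumes the flow hook setup from Step 5, an environment-level `WallarmConfig` KVM, and a single deployed revision; the function name is hypothetical.

```shell
API="https://apigee.googleapis.com/v1"

# Print, in order, the DELETE targets for removing the connector.
# mode is Sync or Async; rev is the deployed shared flow revision.
wallarm_cleanup_urls() {
  local org="$1" env="$2" mode="$3" rev="$4" kind flow
  local base="$API/organizations/$org"
  # 1. Detach the flow hooks.
  printf '%s\n' "$base/environments/$env/flowhooks/PreProxyFlowHook"
  printf '%s\n' "$base/environments/$env/flowhooks/PostProxyFlowHook"
  # 2. Undeploy, then delete, each shared flow.
  for kind in Request Response; do
    flow="Wallarm-$mode-$kind-Flow"
    printf '%s\n' "$base/environments/$env/sharedflows/$flow/revisions/$rev/deployments"
    printf '%s\n' "$base/sharedflows/$flow"
  done
  # 3. Delete the KVM.
  printf '%s\n' "$base/environments/$env/keyvaluemaps/WallarmConfig"
}

# Example: review the list first, then issue the calls.
#   wallarm_cleanup_urls "<APIGEE_ORG_ID>" "<APIGEE_ENV>" Sync 1 |
#     while read -r url; do
#       curl -sS -X DELETE -H "Authorization: Bearer $(gcloud auth print-access-token)" "$url"
#     done
```

Detaching a flow hook removes whichever shared flow is attached to it, so skip the first two URLs if the hooks now carry flows unrelated to Wallarm.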