Deploying the NGINX Node with AWS AMI

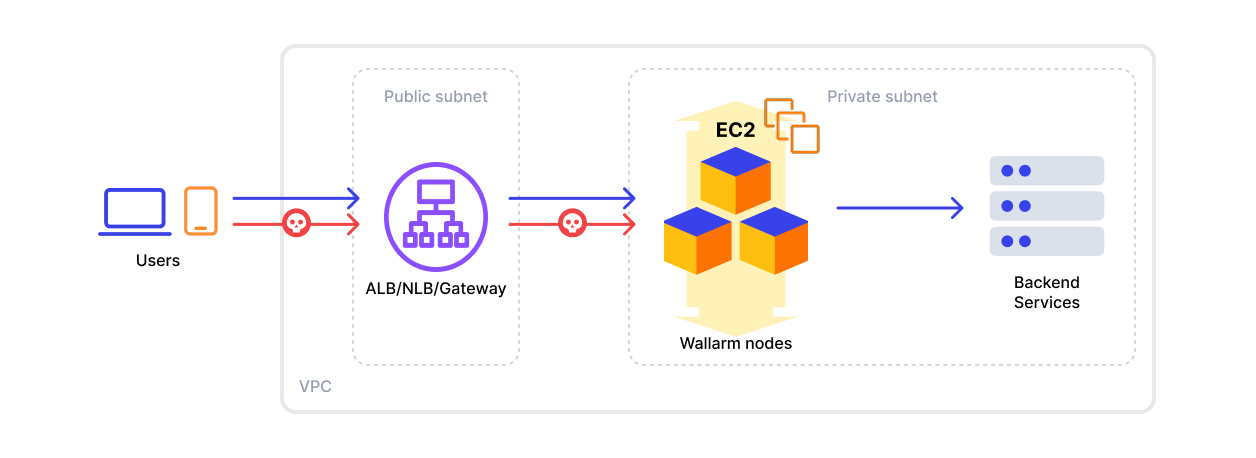

This article provides instructions for deploying the Wallarm NGINX node on AWS in-line using the official Amazon Machine Image (AMI).

The image is based on Debian and the NGINX version provided by Debian. Currently, the latest image uses Debian 12, which includes NGINX stable 1.22.1.

Deploying the Wallarm Node from the AMI on AWS typically takes around 10 minutes.

Security note

This solution is designed to follow AWS security best practices. We recommend avoiding the use of the AWS root account for deployment. Instead, use IAM users or roles with only the necessary permissions.

The deployment process follows the principle of least privilege, granting only the minimal access required to provision and operate Wallarm components.

For guidance on estimating AWS infrastructure costs for this deployment, see the Cost Guidance for Deploying Wallarm in AWS page.

Use cases

Among all supported Wallarm deployment options, the AMI is recommended for Wallarm deployment in these use cases:

- Your existing infrastructure resides on AWS.
- You aim to deploy a security solution as a separate cloud instance, rather than installing it directly on frontend systems like NGINX.

Requirements

- An AWS account
- Understanding of AWS EC2 and Security Groups
- Any AWS region of your choice; there are no specific restrictions on the region for the Wallarm node deployment

  Wallarm supports both single availability zone (AZ) and multi-AZ deployments. In multi-AZ setups, Wallarm nodes can be launched in separate availability zones and placed behind a load balancer for high availability.
- Access to the account with the Administrator role in Wallarm Console for the US Cloud or EU Cloud
- Executing all commands on a Wallarm instance as a superuser (e.g. `root`)
- No system user named `wallarm` exists
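Before installing, you can check the last two requirements on the instance with a short script. This is a minimal sketch, not part of the Wallarm tooling: it assumes Python 3 is available on the instance, and the `check_prerequisites` function name is hypothetical.

```python
import os
import pwd


def check_prerequisites(user_name: str = "wallarm") -> dict[str, bool]:
    """Report whether the instance meets the superuser and user-name requirements."""
    results = {}
    # An effective UID of 0 means the script runs as root (superuser).
    results["is_superuser"] = os.geteuid() == 0
    # pwd.getpwnam raises KeyError when no such user exists.
    try:
        pwd.getpwnam(user_name)
        results["user_absent"] = False
    except KeyError:
        results["user_absent"] = True
    return results


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name}: {'OK' if ok else 'FAIL'}")
```

Both values should print `OK` before you continue with the installation.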

Installation¶

1. Launch a Wallarm Node instance¶

Launch an EC2 instance using the Wallarm NGINX Node AMI.

Recommended configuration:

-

Latest available AMI version

-

Any preferred AWS region

-

EC2 instance type:

t3.medium(for testing) orm4.xlarge(for production), see cost guidance for details -

SSH key pair for accessing the instance

-

Appropriate VPC and subnet based on your infrastructure

-

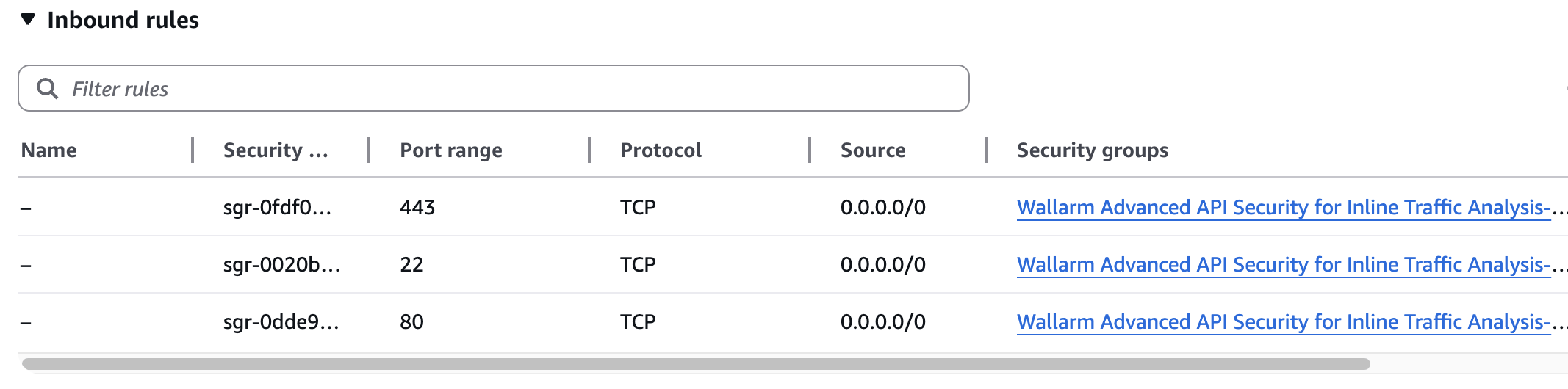

Security Group inbound access to ports 22, 80, and 443

-

Security Group outbound access to:

https://meganode.wallarm.comto download the Wallarm installerhttps://us1.api.wallarm.comfor working with US Wallarm Cloud or tohttps://api.wallarm.comfor working with EU Wallarm Cloud. If access can be configured only via the proxy server, then use the instructions-

IP addresses below for downloading updates to attack detection rules and API specifications, as well as retrieving precise IPs for your allowlisted, denylisted, or graylisted countries, regions, or data centers
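If you manage the Security Group with the AWS SDK for Python (boto3), the rules above can be expressed as `IpPermissions` entries. The sketch below is an illustration under stated assumptions: the helper names are hypothetical, SSH is opened to a separate (ideally narrower) set of admin ranges in line with least privilege, and because Security Groups match IP ranges rather than host names, you must supply the CIDR ranges of the Wallarm endpoints yourself; none are hard-coded here.

```python
# Hypothetical helpers that build IpPermissions entries in the shape used by
# boto3's EC2 authorize_security_group_ingress / authorize_security_group_egress.
WEB_PORTS = (80, 443)  # HTTP and HTTPS
SSH_PORT = 22


def tcp_rule(port: int, cidrs: list[str]) -> dict:
    """One TCP rule for a single port, open to the given CIDR ranges."""
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "IpRanges": [{"CidrIp": cidr} for cidr in cidrs],
    }


def build_rules(client_cidrs: list[str], admin_cidrs: list[str],
                wallarm_cidrs: list[str]) -> tuple[list[dict], list[dict]]:
    """Return (ingress, egress) rule lists.

    client_cidrs: where HTTP/HTTPS traffic may come from.
    admin_cidrs: where SSH connections may come from.
    wallarm_cidrs: IP ranges of the Wallarm endpoints, supplied by you.
    """
    ingress = [tcp_rule(SSH_PORT, admin_cidrs)]
    ingress += [tcp_rule(port, client_cidrs) for port in WEB_PORTS]
    # The Wallarm URLs listed above use HTTPS (port 443).
    egress = [tcp_rule(443, wallarm_cidrs)]
    return ingress, egress


if __name__ == "__main__":
    # Documentation-only address ranges (RFC 5737); replace with real values.
    ingress, egress = build_rules(["0.0.0.0/0"], ["203.0.113.0/24"], ["198.51.100.10/32"])
    print(ingress)
    print(egress)
```

You could then pass the lists to `ec2.authorize_security_group_ingress(GroupId=..., IpPermissions=ingress)` and `ec2.authorize_security_group_egress(GroupId=..., IpPermissions=egress)`. Keep in mind that a new Security Group allows all outbound traffic by default, so the egress rule only restricts traffic once that default outbound rule is removed.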

2. Connect to the Wallarm Node instance via SSH

Use the selected SSH key to connect to your running EC2 instance. You need to use the `admin` username to connect to the instance.
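For example, the connection command can be assembled as follows. This is a sketch: the key file name and the IP address `203.0.113.10` are placeholders, and the `ssh_command` helper is hypothetical; only the `admin` username comes from this guide.

```python
import shlex


def ssh_command(key_path: str, host: str, user: str = "admin") -> list[str]:
    """Build the ssh argument list; "admin" is the username this guide requires."""
    return ["ssh", "-i", key_path, f"{user}@{host}"]


if __name__ == "__main__":
    # Placeholders: use your own key file and the instance's public IP or DNS name.
    print(shlex.join(ssh_command("wallarm-node.pem", "203.0.113.10")))
```

Running the script prints `ssh -i wallarm-node.pem admin@203.0.113.10`, which you can paste into a terminal, or you can pass the list directly to `subprocess.run()`.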

3. Generate a token to connect an instance to the Wallarm Cloud

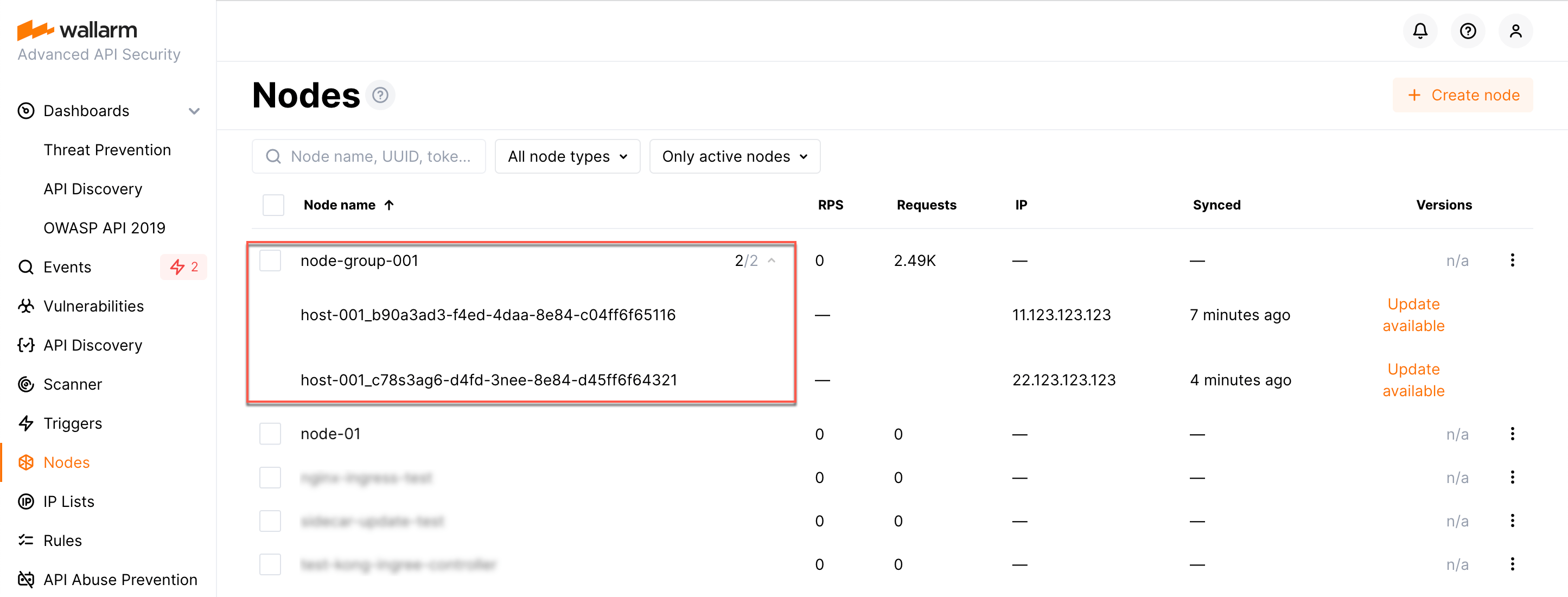

The Wallarm node needs to connect to the Wallarm Cloud using a Wallarm token of the appropriate type. An API token allows you to create a node group in the Wallarm Console UI, helping you organize your node instances more effectively.

Generate a token as follows:

4. Connect the instance to the Wallarm Cloud

The instance's node connects to the Wallarm Cloud via the `cloud-init.py` script. This script registers the node with the Wallarm Cloud using the provided token, globally sets it to the monitoring mode, and sets up the node to forward legitimate traffic based on the `--proxy-pass` flag.

Run the `cloud-init.py` script on the instance created from the cloud image. The script uses the following values:

- `WALLARM_LABELS='group=<GROUP>'` sets a node group name (an existing group, or a new one that is created if it does not exist). It is applied only when using an API token.
- `<TOKEN>` is the copied value of the token.
- `<PROXY_ADDRESS>` is the address the Wallarm node proxies legitimate traffic to. It can be the IP of an application instance, a load balancer, or a DNS name (depending on your architecture), with the `http` or `https` protocol specified, e.g., `http://example.com` or `https://192.0.2.1`. See more information on the proxy address format.
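Because a missing scheme is an easy mistake in `<PROXY_ADDRESS>`, you may want to sanity-check the value before running the script. The following sketch applies the rules stated above (an explicit `http` or `https` scheme, and a host given as an IP address or DNS name). The `validate_proxy_address` function is hypothetical and does not reproduce the checks that `cloud-init.py` itself performs.

```python
import ipaddress
from urllib.parse import urlsplit


def validate_proxy_address(address: str) -> str:
    """Return the address unchanged if it looks valid, else raise ValueError."""
    parts = urlsplit(address)
    # The scheme must be given explicitly: http:// or https://
    if parts.scheme not in ("http", "https"):
        raise ValueError(f"missing or unsupported scheme in {address!r}")
    host = parts.hostname
    if not host:
        raise ValueError(f"no host in {address!r}")
    try:
        # Accept IPv4/IPv6 literals, e.g. https://192.0.2.1
        ipaddress.ip_address(host)
    except ValueError:
        # Otherwise require a plausible DNS name: dot-separated labels made
        # of letters, digits, and hyphens.
        labels = host.split(".")
        if not all(label and all(c.isalnum() or c == "-" for c in label)
                   for label in labels):
            raise ValueError(f"invalid host {host!r} in {address!r}")
    return address
```

For example, `validate_proxy_address("http://example.com")` passes, while `validate_proxy_address("example.com")` raises `ValueError` because the protocol is missing.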

5. Configure sending traffic to the Wallarm instance

Update the targets of your load balancer to send traffic to the Wallarm instance. For details, refer to your load balancer's documentation.
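If your load balancer is an AWS Application or Network Load Balancer with instance targets, the change amounts to registering the Wallarm instance in the target group and deregistering the application instances that now sit behind the node. A minimal sketch, assuming boto3 and a hypothetical `target_swap_plan` helper; the ARN, instance IDs, and ports are placeholders:

```python
def target_swap_plan(target_group_arn: str, wallarm_ids: list[str], app_ids: list[str],
                     wallarm_port: int = 80, app_port: int = 80) -> dict[str, dict]:
    """Keyword arguments for boto3 elbv2 register_targets / deregister_targets.

    Hypothetical helper: the Wallarm instances join the target group, and the
    application instances, which the node now proxies to, leave it.
    """
    return {
        "register": {
            "TargetGroupArn": target_group_arn,
            "Targets": [{"Id": i, "Port": wallarm_port} for i in wallarm_ids],
        },
        "deregister": {
            "TargetGroupArn": target_group_arn,
            "Targets": [{"Id": i, "Port": app_port} for i in app_ids],
        },
    }
```

With `elbv2 = boto3.client("elbv2")`, you would call `elbv2.register_targets(**plan["register"])` first and `elbv2.deregister_targets(**plan["deregister"])` afterwards, so the target group is never left without healthy targets during the switch.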

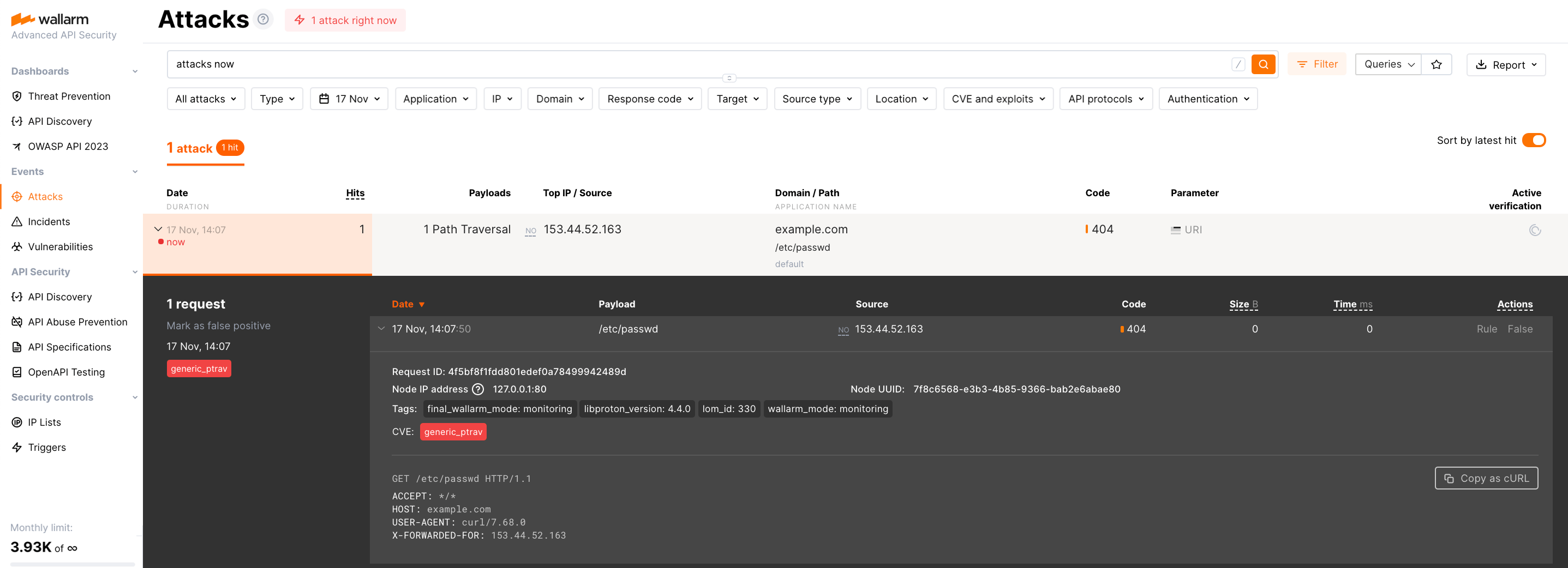

6. Test the Wallarm operation

- Send a request with a test Path Traversal attack to the address of either the load balancer or the machine with the Wallarm node.
- Open Wallarm Console → Attacks section in the US Cloud or EU Cloud and make sure the attack is displayed in the list.

  Since Wallarm operates in monitoring mode, the Wallarm node does not block the attack but registers it.
- Optionally, test other aspects of the node's operation.
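The test request can also be sent from a script. In this sketch, the `/etc/passwd` path is an assumed, commonly used Path Traversal-style payload, and the function names are hypothetical; replace the base URL with the address of your load balancer or Wallarm node.

```python
import urllib.error
import urllib.request

# Assumed test payload; not a value defined by this guide.
TEST_PATH = "/etc/passwd"


def attack_url(base_url: str) -> str:
    """Append the test payload to the load balancer or node address."""
    return base_url.rstrip("/") + TEST_PATH


def send_test_request(base_url: str, timeout: float = 10.0) -> int:
    """Send the probe and return the HTTP status code.

    In monitoring mode the node does not block the request, so whatever
    status the backend returns is expected.
    """
    try:
        with urllib.request.urlopen(attack_url(base_url), timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # A 4xx/5xx from the backend still means the request passed the node.
        return err.code
```

For example, `send_test_request("http://203.0.113.10")` returns the status code the backend answered with. The actual confirmation that detection works is the attack entry in Wallarm Console, not the status code.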

Verifying the node operation using logs and metrics

To verify that the node is detecting traffic, check its metrics and logs:

- Check the Prometheus metrics exposed by the node.
- Review NGINX logs to inspect incoming requests and errors:
    - Access logs: `/var/log/nginx/access.log`
    - Error logs: `/var/log/nginx/error.log`
- Review Wallarm-specific logs, which include details such as data sent to the Wallarm Cloud, detected attacks, and more. These logs are located in the `/opt/wallarm/var/log/wallarm` directory.
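To work through the metrics and logs above more quickly, you can parse them with a short script. The sketch assumes the metrics are served in the standard Prometheus text exposition format and that the access log uses NGINX's default `combined` format. The function names and any metric names in sample data are hypothetical, and the address of the node's metrics endpoint is not covered here.

```python
import re
from collections import Counter

# A sample line looks like: name{label="value",...} value [timestamp]
_SAMPLE = re.compile(r"^([a-zA-Z_:][a-zA-Z0-9_:]*)(\{[^}]*\})?\s+(\S+)")

# In the "combined" log format the status code follows the quoted request line.
_STATUS = re.compile(r'\] "[^"]*" (\d{3}) ')


def parse_prometheus(text: str) -> dict[str, float]:
    """Map 'name{labels}' to its value, skipping # HELP / # TYPE lines.

    Simplified: label values containing '}' are not supported.
    """
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        match = _SAMPLE.match(line)
        if match:
            name, labels, value = match.group(1), match.group(2) or "", match.group(3)
            samples[name + labels] = float(value)
    return samples


def status_counts(lines) -> Counter:
    """Count access-log entries per HTTP status code."""
    counts = Counter()
    for line in lines:
        match = _STATUS.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts
```

For example, `with open("/var/log/nginx/access.log") as f: print(status_counts(f))` shows how many requests ended with each status code, which makes a sudden rise in errors easy to spot.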

Fine-tune the deployed solution

The deployment is now complete. The filtering node may require some additional configuration after deployment.

Wallarm settings are defined using NGINX directives or the Wallarm Console UI. Directives should be set in the following files on the Wallarm instance:

- `/etc/nginx/sites-enabled/default` defines the configuration of NGINX
- `/etc/nginx/conf.d/wallarm.conf` defines the global configuration of the Wallarm filtering node
- `/etc/nginx/conf.d/wallarm-status.conf` defines the filtering node monitoring service configuration
- `/opt/wallarm/wstore/wstore.yaml` contains the postanalytics service (wstore) settings

You can modify the listed files or create your own configuration files to define the operation of NGINX and Wallarm. We recommend creating a separate configuration file with a `server` block for each group of domains that should be processed the same way (e.g. `example.com.conf`). For detailed information about working with NGINX configuration files, see the official NGINX documentation.
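As an illustration of the per-domain-group approach, the sketch below renders a `server` block for a file such as `example.com.conf`. The template is an assumption, not the configuration shipped in the image: `wallarm_mode` is a Wallarm NGINX directive (`monitoring` matches the mode set by `cloud-init.py`), while the port, server names, and upstream address are placeholders, and the `render_server_block` helper is hypothetical.

```python
# Hypothetical template for one group of domains. wallarm_mode is a Wallarm
# NGINX directive; listen, server_name, and proxy_pass are standard NGINX.
TEMPLATE = """\
server {{
    listen {port};
    server_name {server_names};

    wallarm_mode {mode};

    location / {{
        proxy_pass {upstream};
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }}
}}
"""

WALLARM_MODES = ("off", "monitoring", "safe_blocking", "block")


def render_server_block(server_names: list[str], upstream: str,
                        port: int = 80, mode: str = "monitoring") -> str:
    """Return the text of a server block for the given domains."""
    if mode not in WALLARM_MODES:
        raise ValueError(f"unsupported wallarm_mode value: {mode!r}")
    return TEMPLATE.format(
        port=port,
        server_names=" ".join(server_names),
        mode=mode,
        upstream=upstream,
    )


if __name__ == "__main__":
    print(render_server_block(["example.com", "www.example.com"], "http://192.0.2.1"))
```

You could save the output as `example.com.conf` in a directory that the NGINX configuration includes. Debian's default `nginx.conf` includes `/etc/nginx/conf.d/*.conf`; check that the image keeps this include before relying on it.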

Creating a configuration file

When creating a custom configuration file, make sure that NGINX listens for incoming connections on a free port.
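A quick way to see whether a port is already taken is to try binding to it. A minimal sketch with a hypothetical `is_port_free` helper; run it on the Wallarm instance as the superuser, because binding to ports below 1024 requires root.

```python
import socket


def is_port_free(port: int, host: str = "0.0.0.0") -> bool:
    """Return True if a TCP socket can currently bind to host:port.

    The result is only a snapshot: another process may take the port later.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except PermissionError:
            # Privileged port and not running as root: re-raise instead of guessing.
            raise
        except OSError:
            # Typically EADDRINUSE: something (e.g., NGINX) already listens here.
            return False
    return True
```

For example, `is_port_free(80)` returns `False` on the running instance if the default NGINX site already listens on port 80, which tells you to pick another port for the new `server` block.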

Below are a few typical settings that you can apply if needed:

To apply the settings, restart NGINX on the Wallarm instance.

Each configuration file change requires an NGINX restart to take effect.
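To avoid restarting NGINX with a broken file, you can validate the configuration first. The sketch assumes a systemd-managed NGINX service on Debian: `nginx -t` checks the configuration syntax, and `systemctl restart nginx` restarts the service. The `apply_nginx_config` helper is hypothetical.

```python
import subprocess

# Standard commands on a systemd-based Debian host.
CHECK_CMD = ["nginx", "-t"]
RESTART_CMD = ["systemctl", "restart", "nginx"]


def apply_nginx_config(dry_run: bool = False) -> list[list[str]]:
    """Validate the NGINX configuration, then restart NGINX if it is valid.

    Returns the commands in the order they are (or would be) run. Because of
    check=True, a failed "nginx -t" raises CalledProcessError and the restart
    is skipped, so a broken file does not take the node down.
    """
    commands = [CHECK_CMD, RESTART_CMD]
    if not dry_run:
        for cmd in commands:
            subprocess.run(cmd, check=True)
    return commands
```

Run it as the superuser after each configuration change; `apply_nginx_config(dry_run=True)` only lists the commands without executing them.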